RODSL - Learning Robust Object Detection with Soft-Labels from Multiple Annotators (WiSe 2022/23)

Relying on predictions of data driven models means trusting the ground truth data - since models can only predict what they have learned. But what if the training data is very difficult to annotate since it requires expert knowledge and the annotator might be wrong?

A possible way to target these issues is to annotate data multiple times and extract the presumed ground truth via majority voting. However, there are other methods that use these data more effectively.

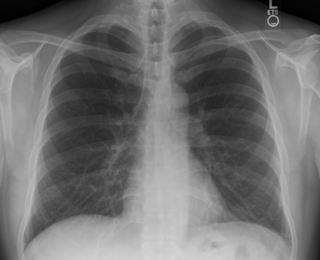

This project will explore existing methods to merge, vote or otherwise extract ground truth from multi-annotated images. For this purpose the VinDr-XCR and TexBiG Dataset are used, since both provide multi-annotated object detection data. Furthermore, deep neural network architectures will be modified and adapted to utilize such data better during training.