Human-Machine Neurosynthesizer

Up to this point, the conceptual development of the Neurosynthesizer has been processual.

While researching references on Brain-Computer Music Interfaces (BCMI), a fairly recurrent practice at the intersection of Media Art and Science over the last ten years, I encountered the Neural Network Synthesizer. Forrest Warthman and Mark Holler developed it between 1989 and 1994 for the electronic music pioneer and longtime John Cage collaborator David Tudor, for his works Neural Synthesis and Neural Network Plus. Tudor used the Neural Network Synthesizer in several pieces and live performances, including the album Neural Synthesis Nos. 6-9. What I find especially interesting in these works is the feedback between the sounds, which are fed into one another so that each output becomes another input.

My interest in integrating an EEG (Electroencephalogram) sensor with audio synthesis developed in Max/MSP, in order to experiment with a BCMI for Brain Controlled Synthesis, also gave me my first contact with some basic knowledge of Neuroscience. Since I already intended to develop a Neurosynthesizer that would establish communication between different synthesized sounds, each functioning as a Neuron, the concept of a Neural Network became fundamental. A Biological Neural Network consists of interconnected neurons, each of which receives inputs and, based on them, produces outputs that are passed on to other neurons.
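As a rough illustration of synthesized sounds communicating as Neurons, the hypothetical sketch below (not Tudor's instrument and not this project's Max/MSP patch) treats each Neuron as a sine oscillator whose frequency is modulated by the weighted outputs of the other Neurons on the previous sample, so that every output is fed back as another Neuron's input. The function name, the weight matrix, the base frequencies and the modulation depth are all illustrative assumptions.

```python
import math


def render_neuron_network(base_freqs, weights, depth_hz, sample_rate, n_samples):
    """Render a small network of interconnected sine-oscillator 'Neurons'.

    Hypothetical sketch: weights[i][j] is how strongly Neuron j's previous
    output pushes Neuron i's frequency up or down (in units of depth_hz).
    """
    n = len(base_freqs)
    phases = [0.0] * n
    outputs = [0.0] * n  # previous output sample of each Neuron, in [-1, 1]
    signals = [[] for _ in range(n)]
    for _ in range(n_samples):
        new_outputs = []
        for i in range(n):
            # Inputs of Neuron i: weighted sum of the other Neurons' outputs
            drive = sum(weights[i][j] * outputs[j] for j in range(n) if j != i)
            freq = base_freqs[i] + depth_hz * drive
            # Advance the oscillator phase and wrap it into [0, 2*pi)
            phases[i] = (phases[i] + 2 * math.pi * freq / sample_rate) % (2 * math.pi)
            new_outputs.append(math.sin(phases[i]))
        # All Neurons are updated together from the previous sample's outputs
        outputs = new_outputs
        for i, value in enumerate(outputs):
            signals[i].append(value)
    return signals
```

For example, `render_neuron_network([220.0, 330.0, 440.0], [[0.0, 0.5, 0.2], [0.3, 0.0, 0.4], [0.1, 0.6, 0.0]], 50.0, 44100, 44100)` would produce one second of three mutually modulating tones. Updating all Neurons from the previous sample keeps the result independent of the order in which the Neurons are computed.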

While still studying the development of the interface, I was drawn to some basic principles of Machine Learning, and finding a strong parallel between them and some principles of Neuroscience was a welcome surprise. In Machine Learning, the concept of a Neural Network is also present and fundamental, in the form of the Artificial Neural Network (ANN), one of the main families of models used in Machine Learning.

Artificial Neural Networks (ANN) are computer systems that learn to produce outputs from training examples. They are also built from connected Neurons, which pass information to one another through an interconnected system of inputs and outputs. Although inspired by Biological Neural Networks, Artificial Neural Networks do not necessarily function identically to them. At this stage, I also attempted to develop a Neural Network in Max/MSP (available on the Wiki under the Patches section). The attempt is currently discontinued because of the complexity of implementing such systems in Max/MSP, which is not a standard environment for them, although future attempts might still lead to interesting results.
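To make "learning outputs from training examples" concrete, here is a minimal sketch of a single artificial Neuron in Python. It is a classic perceptron (weighted sum of inputs plus a bias, a step activation, and the perceptron learning rule), chosen only for illustration; the class name, the learning rate and the logical-AND training set are assumptions, not part of the project.

```python
def step(x):
    """Step activation: the Neuron 'fires' (1) when its input sum is non-negative."""
    return 1 if x >= 0 else 0


class Neuron:
    """A single perceptron-style artificial Neuron (illustrative sketch)."""

    def __init__(self, n_inputs):
        self.weights = [0.0] * n_inputs
        self.bias = 0.0

    def output(self, inputs):
        # Weighted sum of the inputs plus bias, passed through the step activation
        total = sum(w * x for w, x in zip(self.weights, inputs)) + self.bias
        return step(total)

    def train(self, examples, learning_rate=0.5, epochs=20):
        # Perceptron learning rule: after each example, move the weights and
        # bias in the direction that reduces the error (target - output)
        for _ in range(epochs):
            for inputs, target in examples:
                error = target - self.output(inputs)
                for k, x in enumerate(inputs):
                    self.weights[k] += learning_rate * error * x
                self.bias += learning_rate * error


# Training examples for logical AND: (inputs, expected output)
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
```

After `neuron = Neuron(2)` and `neuron.train(AND_EXAMPLES)`, the Neuron reproduces the AND table. A single Neuron like this can only learn linearly separable mappings; an ANN connects many of them in layers so that the outputs of some Neurons become the inputs of others.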

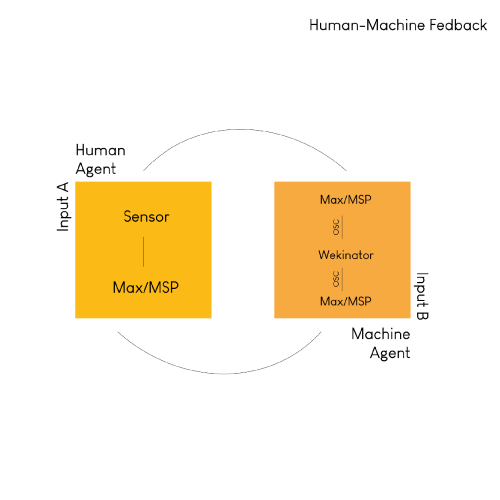

From there, working with the concept of interconnected so-called Neurons to build a Neurosynthesizer, albeit a rather simplified one, proved an interesting space for exploring the Affective possibilities of a feedback system: audio synthesis is activated by signals from the human body, and the machine's response, after the Machine Learning process, outputs further possibilities derived from that first synthesis. In this feedback system, the Human agent and the responsive Machine agent are interconnected and generate a feedback cycle between each other.

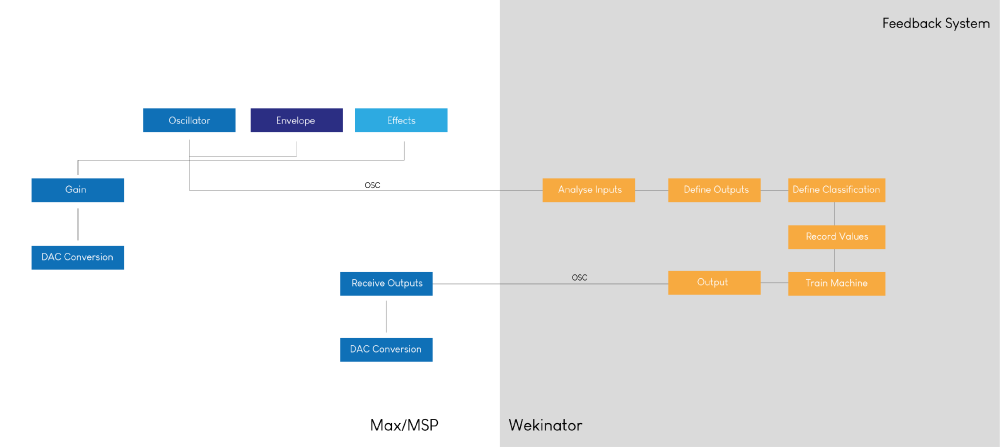

I'm interested in a rather contemporary issue: the potential opportunities and challenges of music creation with Artificial Intelligence, that is, the interconnection between human(s) and technological agent(s). As a time-efficient solution, using a stand-alone technology proved a reasonable alternative to developing the system from scratch. Although it is to some degree a Black Box, Wekinator, software developed for using Machine Learning in creative applications, seemed a good choice for creatively exploring a connection between Max/MSP and Machine Learning.
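Wekinator exchanges data with other programs through OSC (Open Sound Control) messages over UDP, which is also how Max/MSP can talk to it. The stdlib-only Python sketch below encodes an OSC message whose arguments are all 32-bit floats and sends it to Wekinator. The port 6448 and the address `/wek/inputs` are what we understand to be Wekinator's default input settings and should be checked against the running project; the helper names are hypothetical.

```python
import socket
import struct


def _osc_string(s):
    """Encode an OSC string: ASCII bytes plus a null terminator, padded to 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_message(address, values):
    """Encode an OSC message whose arguments are all big-endian 32-bit floats."""
    type_tags = "," + "f" * len(values)  # one 'f' type tag per float argument
    args = b"".join(struct.pack(">f", float(v)) for v in values)
    return _osc_string(address) + _osc_string(type_tags) + args


def send_to_wekinator(values, host="127.0.0.1", port=6448, address="/wek/inputs"):
    """Send one frame of input features to Wekinator (assumed default port/address)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_osc_message(address, values), (host, port))
```

For example, `send_to_wekinator([0.2, 0.7, 0.5])` would send three features, such as values derived from the EEG signal, as one input frame. Within Max/MSP itself, the same messages would typically be sent and received with the `udpsend` and `udpreceive` objects rather than with custom code.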

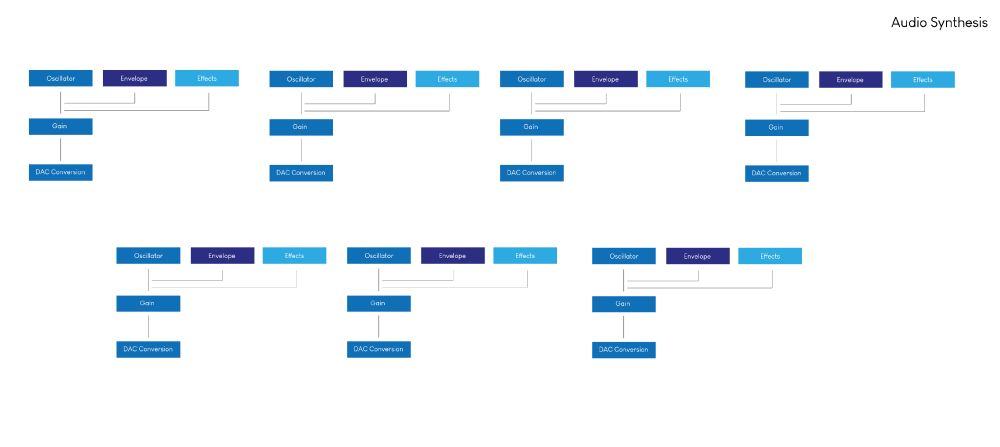

In this framework, the human agent emits signals from their body, which are processed through sound synthesis and constitute one Neuron of the system. The synthesis is built from sine wave oscillators, envelopes and sound effects. The same signals are received by the technological agent, which processes them through Machine Learning and sends the results back to Max/MSP. The main interest of the work is the intercommunication between the human and technological agents through the music created by this feedback cycle. The work is currently being developed as an investigation of the affective reaction between a human and a technological agent in music creation, and of the use of AI as a creative tool for music creation.
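The synthesis side of one Neuron, a sine wave oscillator shaped by an envelope, can be sketched in Python as follows. This is only an approximation of what the Max/MSP patch does: it assumes the body signal arrives already normalized to the range 0 to 1, maps it linearly to a frequency between 200 Hz and 800 Hz, and uses a simple linear attack/release envelope. The sound effects and the Wekinator response are left out, and all names and ranges are hypothetical.

```python
import math

SAMPLE_RATE = 44100


def signal_to_frequency(value, low_hz=200.0, high_hz=800.0):
    """Map a normalized body signal (assumed 0..1) linearly onto a frequency range."""
    value = min(1.0, max(0.0, value))  # clamp out-of-range readings
    return low_hz + value * (high_hz - low_hz)


def render_neuron(value, duration=0.5, attack=0.05, sample_rate=SAMPLE_RATE):
    """Render one 'Neuron' sound: a sine oscillator with a linear attack/release envelope."""
    freq = signal_to_frequency(value)
    n = int(duration * sample_rate)
    attack_n = max(1, int(attack * sample_rate))
    release_n = max(1, n - attack_n)
    samples = []
    for k in range(n):
        if k < attack_n:
            env = k / attack_n  # rise from 0 towards 1
        else:
            env = 1.0 - (k - attack_n) / release_n  # fall from 1 towards 0
        samples.append(env * math.sin(2 * math.pi * freq * k / sample_rate))
    return samples
```

In the feedback cycle described above, the values returned by the Machine Learning model would be used the same way, for instance as new `value` inputs for other Neurons, so that the machine's response produces further sounds derived from the first synthesis.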