
[[:File:twist_poem_pdf.pdf]]

Revision as of 15:11, 29 October 2020

The outset

In an effort to make the virtual space more ‘real’ - more tangible somehow - I started out with the intention to bring ‘non-digital’ crafts into the VR space. Additionally, I was introduced to the very basics of working with sound through data patches in Pure Data. This soon led me to a poem titled ‘twist’ by Malina Heinemann. I was especially struck by her spoken word performance of the poetry, which subsequently led me into considerations about sound, intonation, rhythm and understanding.

Collaborative Concept

As a Virtual Reality experience in experimental sound practice, centering around poetry as spoken word performance, the VR poem is developed in collaboration with the poet, Malina Heinemann, who lives and works in Berlin.

Sound concept

The poem as originally performed by Malina at ‘drift’ in Berlin:

The project will translate poetry into a virtual, visual experience and investigate spoken-word poetry through computation. The starting point is a curiosity about how pronunciation - constructed through rhythm, pauses and intonation - can play with language and cognition. The project is based on the poem ‘twist’ - as spoken/presented by the poet herself - the style of which will be analysed and relayed through a data patch in the visual programming software Pure Data. The set-up of the patches follows the question: (how) do small shifts in rhythm, tempo, pauses (and pitch) create a unique auditory experience? This generative sound experience is designed to be nuanced and slightly different every time it is played/performed by the algorithm, just as it would be when performed as spoken word by the human poet.

The data patch will identify pauses in the poem and randomise them, pulling words further apart, splitting them in two, or pushing them closer together. This creates new sound-word hybrids, which will be based on the internal logic of the spoken poem as it was originally performed. In effect, this might leave a moment longer to understand, or make it a second harder to catch up with what is being said. Words that carry more weight because of their emphasised intonation might be pitched even lower or, conversely, their emphasis might be lifted completely. Sentences that are spoken quickly might be made even quicker, or slowed.

By playing with these parameters of spoken word, the poem is given a unique performative rhythm each time it is heard, which will (re-)shape the poem itself and influence its comprehensibility for the listener. In the process this will reveal to what degree these elements of spoken language play with language and cognition within the poetic genre. This theme of ‘sound research’, i.e. questioning how we listen (hear, perceive and understand speech), is additionally inspired by Diana Deutsch’s research into ‘Musical Illusions and Phantom Words’.

Visual concept

The VR experience will be a slow, fully guided, visual journey through the poem ‘twist’. It will position the poem across and within the space, thereby creating a dynamic, shifting, spatial (sound) experience.

The main visual components (bodies, street elements) are inspired directly by the poem.

dry asphalt

my mind

on this street

the street

pavement

kerbside

through the kerbstone

tongue

behind your ear

my mouth

inside my head

my brain

your teeth are splitting

your lips are bleeding

my fingers / your fingers

my hand

my eyes / your eyes

my inner surface the edges transformed

tongue-mouth-narrowness

finger-hand-fragmentation

mind-street-realisation

Key adjectives or visual phrases are selected to help further define the VR space and effects.

it’s twisted

are twitching

are splitting

are transparent

opacity

restless

my sight

was body-less

alienation

transcendence

are distorting

are blurring

be sharp

twisted knot

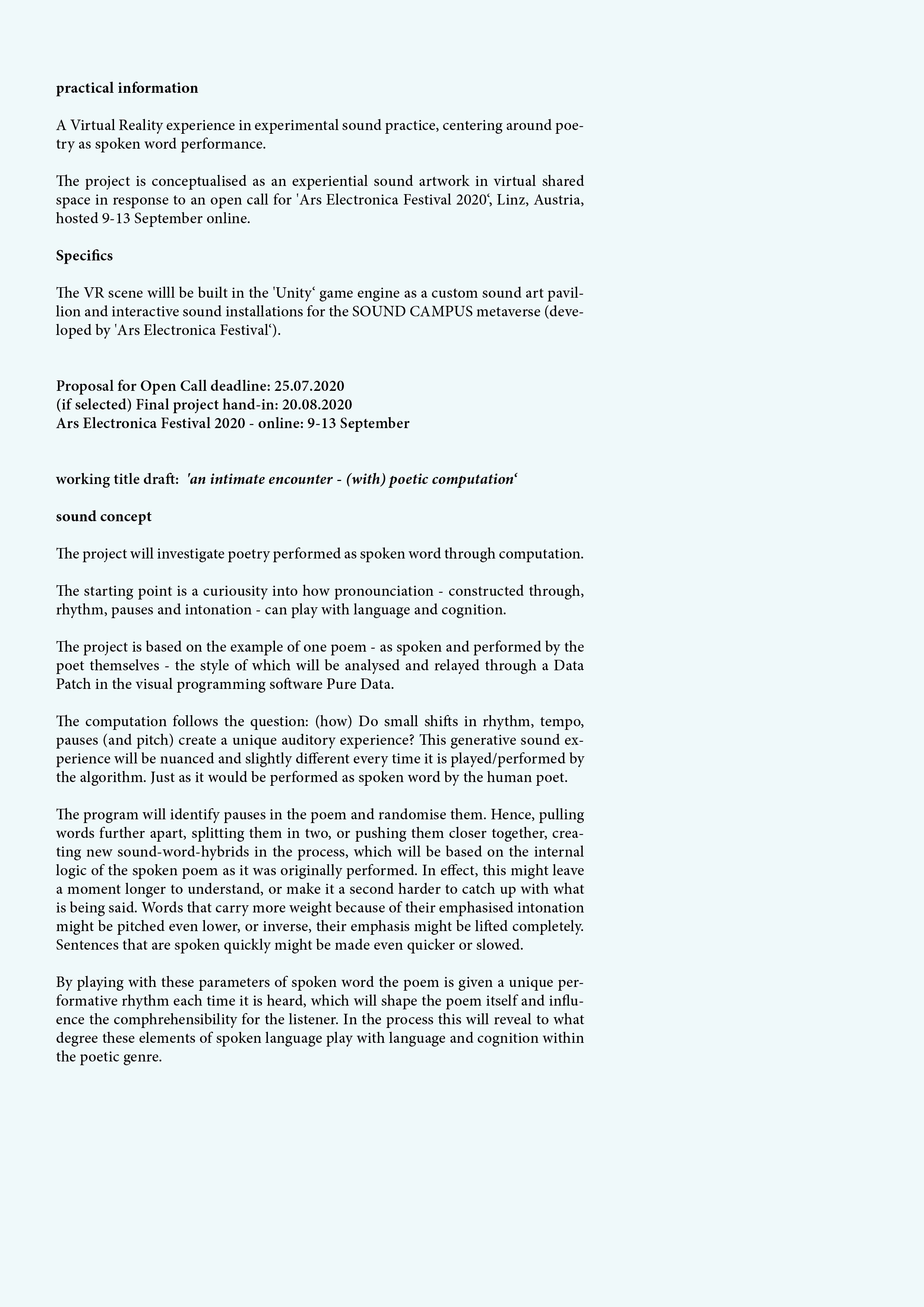

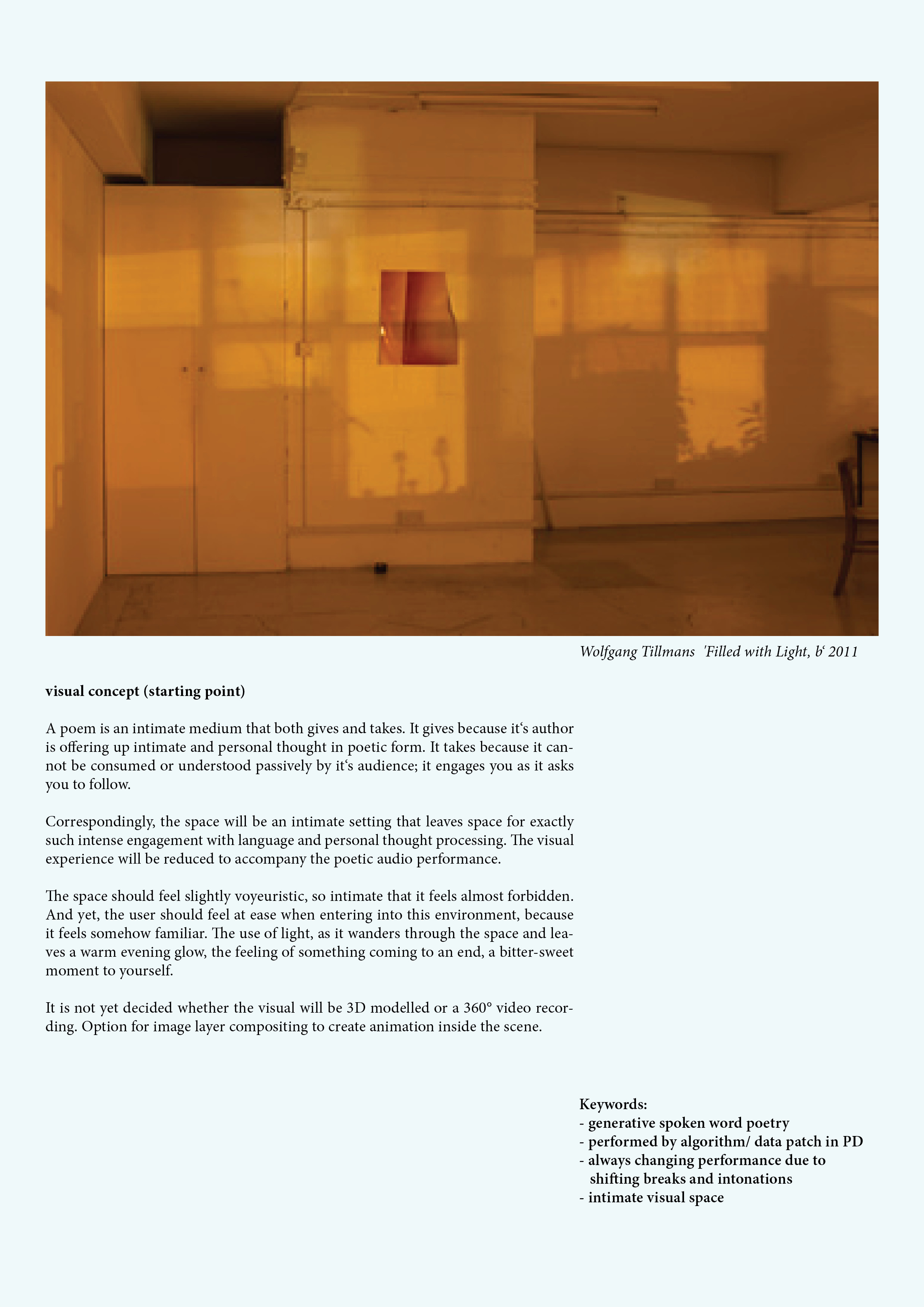

We spoke about the body, about foldings and the simulacra of internal to external realities.

The external symbols of the street, the kerbside, the kerbstone, the dry asphalt all serve as analogies, wherein the outside terminologies are used to illustrate an inner reality.

A conversation between two people? Or an internal monologue? A network of selves? A blurring, twisting, twitching back and forth between these selves.

Visual concept outline:

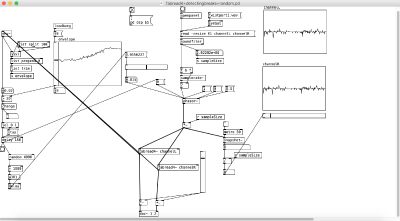

Process: Integration of Pure Data

Patch (No. 1): identify pauses and randomise them

Outline what should happen: (1) Poem plays (2) breaks are detected > by volume (3) breaks are extended (between 1-7 seconds) randomly (4) poem keeps playing

Outline how in PD: > loads audiofile with - > define breaks duration as - > identifies breaks by - > randomises and extends the breaks by -

Sample rate: 48000 samples per second (48 kHz)

Link to Pure Data Patch: File:Tabread4+detectingbreaks+random.pd

In the envelope graph the running sound file is visualised and the breaks are made visible. The detected breaks become the input that sets the new rhythm through a 'random' object.
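The four steps outlined for this patch can also be sketched outside Pure Data. The following Python sketch is only an illustration under stated assumptions, not the patch itself: the function names, the block size, the volume threshold and the minimum pause length are all hypothetical, and "extended between 1-7 seconds" is read here as adding 1 to 7 seconds of silence to each detected break.

```python
import math
import random


def rms_envelope(samples, block_size):
    """Return one RMS (volume) value per block of `block_size` samples."""
    envelope = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        envelope.append(math.sqrt(sum(x * x for x in block) / len(block)))
    return envelope


def find_pauses(envelope, threshold, min_blocks):
    """Return (first_block, end_block_exclusive) for each sufficiently long quiet run."""
    pauses = []
    run_start = None
    # The infinite sentinel closes a quiet run that reaches the end of the file.
    for i, level in enumerate(envelope + [float("inf")]):
        if level < threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            if i - run_start >= min_blocks:
                pauses.append((run_start, i))
            run_start = None
    return pauses


def extend_pauses(samples, pauses, block_size, sample_rate, rng,
                  min_s=1.0, max_s=7.0):
    """Insert a random amount of silence (assumed: 1-7 s) at the end of each pause."""
    out = []
    cursor = 0
    for _, end_block in pauses:
        end_sample = min(end_block * block_size, len(samples))
        out.extend(samples[cursor:end_sample])
        extra = int(rng.uniform(min_s, max_s) * sample_rate)
        out.extend([0.0] * extra)
        cursor = end_sample
    out.extend(samples[cursor:])
    return out
```

Passing a seeded `random.Random` instance makes a particular performance repeatable, while an unseeded one gives a different rhythm on every run, which is closer to the intention described above.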

Patch (No. 2): identify intonation (through pitch) and change the pitch

Possible approach 1:

(1) poem plays (2) rises and falls of the tone/inflection of individual words are detected > by: manually pre-defining select words > telling PD where these words are (3) slightly re-pitched to change the emphasis on select words (* but it is important to keep the voice itself intact) (4) poem keeps playing

Possible approach 2:

(1) poem plays (2) rises and falls of the tone/inflection of individual words are detected by pitch (3) slightly re-pitched to change the emphasis on select words (* but it is important to keep the voice itself intact) (4) poem keeps playing

Encountered difficulty: the range I want to alter the pitch in is extremely small
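To get a feeling for how small such a range is, a pitch shift can be expressed in cents (100 cents = 1 semitone) and converted to a playback-rate ratio with 2^(cents/1200); a shift of 30 cents is a ratio of roughly 1.017. The Python sketch below is an assumption-laden illustration, not part of the patch: the function names are hypothetical, and the simple linear-interpolation resampler changes the duration together with the pitch, so keeping the voice intact would still need a method that preserves duration.

```python
def cents_to_ratio(cents):
    """Frequency ratio for a pitch shift in cents (100 cents = 1 semitone)."""
    return 2.0 ** (cents / 1200.0)


def resample_linear(samples, ratio):
    """Read `samples` at `ratio` times the original speed, interpolating linearly.

    ratio > 1 raises the pitch and shortens the segment;
    ratio < 1 lowers the pitch and lengthens it.
    """
    out = []
    position = 0.0
    last = len(samples) - 1
    while position <= last:
        index = int(position)
        frac = position - index
        nxt = samples[min(index + 1, last)]
        out.append(samples[index] * (1.0 - frac) + nxt * frac)
        position += ratio
    return out
```

A word that was marked manually (approach 1) could then be re-pitched with, for example, `resample_linear(word_samples, cents_to_ratio(-30))`, where `word_samples` is a hypothetical list holding the samples of that word.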

Patch (No. 3): randomise tempo of words or phrases

Outline what should happen: (1) poem plays (2) shifts in tempo are detected > by ? (3) subsections/words/phrases are changed (slower, faster) randomly (4) poem keeps playing
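How the shifts in tempo should be detected is still open. One possible reading, sketched here in Python purely as an assumption, is to treat each stretch of speech between two pauses as a segment and to give every segment its own random playback rate. The function name, the segment format and the rate range of 0.8 to 1.25 are hypothetical.

```python
import random


def randomise_tempo(segments, rng, min_rate=0.8, max_rate=1.25):
    """Assign each (start_s, end_s) segment a random playback rate.

    Returns a list of (start_s, end_s, rate, new_duration_s) tuples,
    where rate > 1 means faster and rate < 1 means slower.
    """
    plan = []
    for start_s, end_s in segments:
        rate = rng.uniform(min_rate, max_rate)
        plan.append((start_s, end_s, rate, (end_s - start_s) / rate))
    return plan
```

Keeping the rate range narrow reflects the aim of small, nuanced shifts; the resulting plan only describes the new timing and would still have to be applied to the audio.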

Process

This semester I mainly started work on creating my own visual elements, using photogrammetry as a technique to transport places, textures and people from the real into the rendered mesh.

I used a range of different photogrammetry software: for mobile, quick and relatively small/compact models I used the Trnio app, which works with approx. 80 pictures. This was sufficient for static captures such as the street renderings.

During the shoot with (living, breathing, moving) people I used three different software packages to compare and have a choice of results:

When using Trnio to scan people the results vary from recognisable to quite distorted. It is generally more unpredictable but the models are calculated on an external server, which gives very quick and ‘low effort’ results.

Metashape is a great photogrammetry software that creates very detailed point clouds and a realistic texture on the model. However, once a dense mesh is created, the solid form (sans texture) has many irregularities and a very ‘bumpy’ surface, most probably resulting from the micro-movements of the people being scanned. The models therefore require a lot of cleaning up afterwards.

My personal preference is Autodesk’s ReCap Photo (which sadly only runs on Windows). The software also sends the images to an external server, where the models are created quickly. The way the software deals with ‘irregularities’ or missing information results in very organic colour transitions and fluid, soft shapes. The way the texture is displayed in the 3D viewport is also ideal.

Regrettably, this same quality gets lost when the models are imported into a 3D program like Cinema4D or Blender.

I currently like the Two-sided Unity shader asset from Ciconia Studio, which allows for both the external and internal view of the models in Unity and also creates semi-realistic looking textures.

This is one of my main objectives inside VR - to create tangible, almost touchable textures and move further away from the ‘game’ look.

- For the future - when dealing with scanning people - my additional research indicated that a depth sensor that works with rays instead of a batch of photographs is preferable. I’ve additionally learned that when working with people a batch of 100 images shot in rapid succession is better than up to 300 images with detailed shots, because with more images the software picks up too many slight shifts, which promote irregularities. In this case it seems: less is more.

The models are cleaned up and sculpted in Cinema4D. I considered working with Meshmixer to combine and ‘mesh’ different models together into a type of hybrid body-street sculpture, but currently the main assembly is done directly inside Unity to allow for more flexibility and options to immediately test camera angles.

Inside Unity

Viewer as guided/flying camera

The view of the camera is taken through the bodies, through their edges and foldings. Using Cinemachine:

Body-street-scapes

The intensity of the external spaces is increased by simulating weather conditions that evoke sensory memories of a heatwave, the smell of hot pavement or cooling summer rain on the asphalt. A blurring, wavering heat distortion effect, a damp fog or wet puddles on the pavement.

Skybox

What does it look and feel like to be inside oneself?