== AN EYE TRACKING EXPERIMENT ==


{{#ev:youtube|SswayKs9XtE|700}}

This project is an eye-tracking experiment in Max/MSP, inspired by Alfred Yarbus’s historic findings on eye movements in the 1950s and 1960s. Yarbus recorded eye movements with a small mirror mounted on a suction cap attached to the eye, so that light reflected from the mirror recorded the eye's movements on photosensitive material. I experimented with both recorded video footage and a webcam to track the eyeball. After locating the eyes with externals from the cv.jit library, I used an adaptive threshold to turn the eye image into a white dot on a black background. cv.jit is a collection of Max/MSP/Jitter tools for computer vision applications, and I used the cv.jit.face externals in my webcam tracking patch. The most difficult part was capturing the eyeballs with a webcam because of its low resolution. I used jit.lcd to draw lines based on the data coming from the tracking. The first video shows samples of my webcam-based and video-based eye tracking.
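The threshold-and-centroid step described above can be sketched outside Max as follows. This is a minimal Python illustration under stated assumptions, not the cv.jit implementation: the frame is assumed to be a grayscale image stored as a list of rows of 0-255 values, the window radius and offset are hypothetical defaults, and the pupil is assumed to be darker than its surroundings, so pixels darker than their local mean become white. The centroid of the white pixels gives one gaze point per frame, and consecutive points can be paired into the line segments a drawing object such as jit.lcd would render.

```python
# Sketch only: adaptive threshold -> white pupil dot -> centroid -> line trail.
# Not cv.jit's code; radius/offset values are hypothetical defaults.

def adaptive_threshold(img, radius=1, offset=10):
    """Return a binary image: 255 where a pixel is darker than the mean of its
    neighbourhood by more than `offset` (a dark pupil becomes a white dot)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Mean of the (2*radius+1)^2 window, clipped at the image border.
            total, count = 0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            if img[y][x] < total / count - offset:
                out[y][x] = 255
    return out


def centroid(binary):
    """Centre of mass (x, y) of the white pixels, or None if there are none."""
    xs, ys = [], []
    for y, row in enumerate(binary):
        for x, value in enumerate(row):
            if value:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def trail_segments(points):
    """Pair consecutive gaze points into (x1, y1, x2, y2) segments,
    skipping frames where no pupil was found (None)."""
    pts = [p for p in points if p is not None]
    return [(a[0], a[1], b[0], b[1]) for a, b in zip(pts, pts[1:])]


# Hypothetical 6x5 frame: bright background with a two-pixel dark "pupil".
frame = [[200] * 6 for _ in range(5)]
frame[2][2] = frame[2][3] = 20
print(centroid(adaptive_threshold(frame)))  # (2.5, 2.0)
```

A local (adaptive) threshold is used here instead of a single global cut-off because lighting across a webcam image is rarely uniform; comparing each pixel with its own neighbourhood keeps the pupil separable from shadows elsewhere in the frame.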

The second video shows me looking around my room while my eye movements are tracked. During the quarantine, I stayed at my family's house and spent most of my time in my childhood bedroom. I had many moments when my eyes were darting around as I tried to pull an answer out of my head or search for a long-term memory. Although eye movements are predominantly triggered by external visual input, they also occur during cognitive activities. These eye movements are mostly related to internal thought processes and generally do not serve visual processing. Most recent studies call them ‘nonvisual eye movements’ or ‘stimulus-independent eye movements’. Why people move their eyes when they are thinking or searching for a long-term memory is not yet clear.

{{#ev:youtube|3PQrkL43iEE|700}}

Later on this page you will find some background on the basic types of eye movements, the historic findings of Alfred Yarbus, and a few notes on the eye-tracking technology used today. The patches I used in this class project are at the end of the page. See my research notes below if you want to read more.

[[:File:unexpected visitor.pdf]]

EYE MOVEMENTS AND THEIR ANATOMY

There are roughly 130 million photoreceptors in the human eye, but only on the order of a million fibers in the optic nerve carry the signal to the brain. Eye movements fall into several types, two of which are most commonly studied: fixations and saccades. When the eye rests on a specific location for a certain amount of time, this pause is classified as a fixation. During a fixation the eyes essentially stop scanning the scene and hold central (foveal) vision in place, so that the visual system can take in detailed information about what is being looked at. The rapid movement from one fixation to the next is called a saccade. It is the fastest eye movement, and in fact the fastest movement the body can produce, typically lasting about 30 to 50 milliseconds. Because the eye moves so fast during a saccade, the image on the retina is of poor quality, so information intake happens mostly during fixations.
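One common way to separate fixations from saccades in recorded gaze data is velocity-threshold identification (I-VT): any interval in which the eye moves faster than a fixed angular speed is labelled a saccade, and runs of slower intervals form fixations. The sketch below is a hypothetical Python illustration, not part of the Max patches on this page. It assumes gaze samples have already been converted to degrees of visual angle with timestamps in seconds, and the 100 deg/s threshold is an illustrative value that would need tuning for a real tracker.

```python
import math

# Velocity-threshold identification (I-VT). The 100 deg/s default is an
# illustrative assumption, not a value used in this project.

def classify_ivt(samples, threshold_deg_per_s=100.0):
    """samples: list of (t_seconds, x_deg, y_deg) tuples sorted by time.
    Returns one label per consecutive pair: 'fixation' or 'saccade'."""
    labels = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        speed = math.hypot(x1 - x0, y1 - y0) / (t1 - t0)  # deg/s
        labels.append("saccade" if speed > threshold_deg_per_s else "fixation")
    return labels


def fixation_runs(labels):
    """Group consecutive 'fixation' intervals into inclusive sample-index
    spans (first, last). Interval i lies between samples i and i + 1."""
    runs, start = [], None
    for i, label in enumerate(labels):
        if label == "fixation" and start is None:
            start = i
        elif label != "fixation" and start is not None:
            runs.append((start, i))  # the last fixation interval was i - 1
            start = None
    if start is not None:
        runs.append((start, len(labels)))
    return runs


# Hypothetical 100 Hz recording: a fixation, one fast jump, a second fixation.
samples = [(0.00, 0.0, 0.0), (0.01, 0.1, 0.0), (0.02, 0.2, 0.0),
           (0.03, 5.0, 0.0), (0.04, 5.1, 0.0)]
labels = classify_ivt(samples)
print(labels)                 # ['fixation', 'fixation', 'saccade', 'fixation']
print(fixation_runs(labels))  # [(0, 2), (3, 4)]
```

Because a saccade lasts only a few tens of milliseconds, the sampling rate matters: at an assumed webcam rate of 30 frames per second one frame lasts about 33 ms, so a whole saccade can fall between two samples and appear only as a single large jump.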

INSPIRATION - Alfred Yarbus’s Eye Tracking Experiment

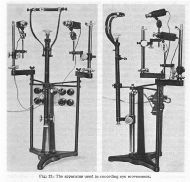

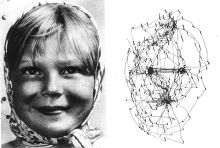

Alfred Yarbus was a Russian psychologist who studied eye movements in the 1950s and 1960s. His book Eye Movements and Vision is one of the most cited publications on eye movements and vision. Yarbus recorded observers' eye movements with a homemade suction-cap gaze-tracking device together with a self-constructed recording device. His findings showed that when different people viewed the same painting, their patterns of eye movements were similar but not identical. He found that when we view a complex scene, we show repeated cycles of inspection behavior, during which the eye stops on and examines the most important elements of the picture. For example, when viewing a portrait, the observer's eye cycles periodically through a triangle formed by the eyes, nose, and mouth of the pictured subject.

In his famous experiment, Yarbus asked the same individual to view the same painting seven times, each time with a different instruction given before viewing. The painting was An Unexpected Visitor by the Russian painter Ilya Repin. Instructions included “give the ages of the people” and “remember the clothes worn by the people”. They asked the viewer to make a series of judgements about the scene, to remember aspects of it, or simply to look at it freely. The recorded eye-movement patterns showed that the observer interrogated the picture in a completely different way depending on the information being sought. Based on this evidence, Yarbus speculated that the eyes are attracted to areas packed with information. As Yarbus observed: “Depending on the task in which a person is engaged, i.e., depending on the character of the information which he must obtain, the distribution of the points of fixation on an object will vary correspondingly, because different items of information are usually localized in different parts of an object.”
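The task dependence Yarbus describes is commonly quantified today by counting how many fixations fall inside areas of interest (AOIs) and comparing those shares across instructions. The Python sketch below is illustrative only: the AOI names and rectangle coordinates are hypothetical and are not measurements of Repin’s painting, and the fixation points are invented example data, not Yarbus’s results.

```python
from collections import Counter

# Hypothetical AOIs as rectangles in image pixels:
# name -> (x_min, y_min, x_max, y_max). Invented for illustration only.
AOIS = {
    "faces": (100, 50, 300, 150),
    "clothing": (100, 150, 300, 400),
    "furniture": (300, 200, 600, 400),
}


def aoi_of(x, y, aois=AOIS):
    """Name of the first AOI containing (x, y), or 'other'."""
    for name, (x0, y0, x1, y1) in aois.items():
        if x0 <= x < x1 and y0 <= y < y1:
            return name
    return "other"


def fixation_distribution(fixations, aois=AOIS):
    """Fraction of fixations (a list of (x, y) points) in each AOI."""
    names = list(aois) + ["other"]
    counts = Counter(aoi_of(x, y, aois) for x, y in fixations)
    total = sum(counts.values())
    if total == 0:
        return {name: 0.0 for name in names}
    return {name: counts[name] / total for name in names}


# Invented fixation points for two different viewing instructions.
ages_task = [(150, 100), (200, 120), (250, 80), (150, 200)]
clothes_task = [(150, 200), (200, 300), (120, 100), (700, 50)]
print(fixation_distribution(ages_task))
# {'faces': 0.75, 'clothing': 0.25, 'furniture': 0.0, 'other': 0.0}
print(fixation_distribution(clothes_task))
# {'faces': 0.25, 'clothing': 0.5, 'furniture': 0.0, 'other': 0.25}
```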

[[File:yarbus3.png|700px]]

[[File:yarbus1.jpg|190px]]

[[File:yarbus2.jpg|220px]]

[[File:unnamed.jpg|130px]]

[[File:1_d1WOfKfTaCeCLUzdU-pH0w.jpeg|180px]]

EYE TRACKING TODAY

In recent years, much eye-tracking research seems to have focused on human-computer interaction, measuring how the human brain processes user interfaces, web pages, graphics, and layouts. Popular applications in marketing include understanding how customers look at products on shelves, finding which sections of a store get the most attention, and optimizing product presentation based on the data.

The standard for eye tracking today is still video-based. Modern eye trackers shine infrared light onto the eye and use high-definition cameras to record the pupil and the reflection of the light on the cornea. Algorithms then calculate the orientation of the eye from these features and determine where it is looking. This makes it possible to measure very fast eye movements with high accuracy. The most advanced eye-tracking devices currently in use are eye-tracking glasses and virtual reality (VR) headsets with integrated eye tracking.
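Video-based trackers of this kind commonly estimate gaze from the vector between the pupil centre and the corneal reflection (glint) of the infrared light, then map that vector to screen coordinates through a short calibration in which the user looks at known targets. The Python sketch below assumes the pupil and glint centres have already been detected in camera pixels and fits a simple affine map by least squares. Real systems often use higher-order polynomial or 3D eye models, and every function name here is hypothetical.

```python
# Pupil-centre / corneal-reflection (PCCR) gaze mapping with an affine
# calibration. Hypothetical sketch: names, model and calibration layout are
# assumptions, not any vendor's method.

def pupil_glint_vector(pupil, glint):
    """Pupil centre minus corneal-reflection (glint) centre, in camera pixels."""
    return (pupil[0] - glint[0], pupil[1] - glint[1])


def _det3(a):
    return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
            - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
            + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))


def _solve3(m, v):
    """Solve the 3x3 system m @ p = v with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate calibration (e.g. collinear points)")
    solution = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = v[r]
        solution.append(_det3(mc) / d)
    return solution


def _fit_axis(vectors, targets):
    """Least-squares fit of target = a*vx + b*vy + c via the normal equations."""
    rows = [(vx, vy, 1.0) for vx, vy in vectors]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
    return _solve3(ata, atb)


def calibrate(vectors, screen_points):
    """Return a function mapping a pupil-glint vector to screen (x, y)."""
    px = _fit_axis(vectors, [p[0] for p in screen_points])
    py = _fit_axis(vectors, [p[1] for p in screen_points])

    def gaze(vec):
        vx, vy = vec
        return (px[0] * vx + px[1] * vy + px[2],
                py[0] * vx + py[1] * vy + py[2])

    return gaze


# Synthetic calibration: five targets generated from a known affine map.
vectors = [(-5, -3), (5, -3), (-5, 3), (5, 3), (0, 0)]
screen = [(100 * vx + 960, 80 * vy + 540) for vx, vy in vectors]
gaze = calibrate(vectors, screen)
print(gaze((2.0, 1.0)))  # (1160.0, 620.0)
```

Using the pupil-glint difference rather than the raw pupil position is what makes this approach tolerant of small head movements, since both features shift together when the head moves; the affine fit is simply the smallest model that can absorb scale and offset differences between users.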

PATCHES

[[:File:videoBasedeyetrack.maxpat]]

[[:File:webBasedeyetrack.maxpat]]

REFERENCES

Alfred L. Yarbus, Eye Movements and Vision, trans. Basil Haigh, New York: Plenum Press, 1967.

Benjamin W. Tatler, Nicholas J. Wade, Hoi Kwan, Boris M. Velichkovsky, “Yarbus, eye movements, and vision”, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3563050/

“How Do We See Art: An Eye-Tracker Study”, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3170918/

“Tracking - Pupil Tracking”, https://www.notion.so/Tracking-Pupil-Tracking-2371c7334752476f86f22e7ee6ffdf47

“cv.jit | Computer Vision for Jitter”, https://jmpelletier.com/cvjit/

“Eye Tracking with CV JIT Centroids”, https://www.youtube.com/watch?v=o4F34FL8BN4&ab_channel=ProgrammingforPeople

Kimberlee Swisher, jit.lcd tutorials, https://www.youtube.com/channel/UCg8PkUJrxqsYaesUwhadzfw

ASSIGNMENTS

[[/Week 1/]] Rotating / jit.world / jit.gl.gridshape

[[/Week 2/]] Data Conversations

[[/Week 3/]] Data Sensing

[[/Week 4/]] OSC Protocol

Latest revision as of 21:56, 18 November 2020
