No edit summary |

No edit summary |

||

| (39 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

1 FEBRUARY 2021 | |||

Final patch: | |||

[[:File:210201_ultrasonic_drawing.maxpat]] | |||

'''Project Summary''' | |||

''Sounding Landshapes'' – digital drawings of objects as sensed with sound. Data collected by scanning objects repeatedly over time with a self-devised ultrasonic sensor device. | |||

Sounding is traditionally a technique by which a boat, outfitted with a sonar device, floats atop the surface of a body of water and measures its depths. Meanwhile, sonography is a method that uses sound to read and image surfaces, most commonly in medicine and geology. In this work, I am interested in sounding as the action and sonography in the sense of its literal etymological meaning “drawing with sound.” | |||

I have chosen sound over other higher-precision options, such as lasers, because I am interested in sound as a specifically Earth-bound medium – sound cannot travel through the void of outer space, so it is a particularly Earthly medium; it is explicitly terrestrial. This interests me when trying to (re)connect to the Earth through artistic practice, using sensors beyond my own body’s senses. | |||

After having made a series of 2D hypothetical sounding studies using ink on paper, I have built my own self-devised sonar tool in the hope of extending the hypothetical 2D sounding studies of my drawings into the 3D space of the “real” world. For the Max/MSP aspect of the project, I mounted the ultrasonic sensor device on my hand and passed it over objects at a steady pace in a level line, so I could still make sense of the data while also having more freedom for imperfection and gesture over a duration of time. So, to take readings, I move my hand back and forth like a scanner over the surface of whatever object I put on the ground. The sensor obtains a reading by emitting regular ultrasonic clicks that hit the surface of the object and then bounce back to the device. The data collected during this process is sent into Max/MSP and used to reconstitute the form of the object in digital space (via a jit.lcd object). The resulting image is the object as seen through sound. Below is my process over several months to develop a Max/MSP patch to this end, along with the resulting images. | |||

25 JANUARY 2021 | |||

With Miga's help I was finally able to offset the lines of the digital drawings so that they layer in a more interesting way. I've uploaded my updated patch here, to which I've added some notes so it's easier for everyone to access: | |||

[[:File:210125_ultrasonic_drawing.maxpat]] | |||

I've also made a video screen capture, so you can see the patch in action: | |||

[https://vimeo.com/504393675 Jit.lcd drawing with ultrasonic sensor input] | |||

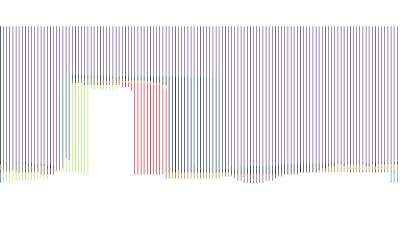

A new question has arisen: How do I make it so that every time the data input restarts (after having been stopped), it restarts at the far left of the window rather than in the middle? You can see in my video that when I restart the data input, the lines start from the middle of the window, not the far left. This makes things too messy when I want to start a fresh image. | |||

<gallery> | |||

File:Screen Shot 2021-01-27 at 3.43.57 PM.png | |||

</gallery> | |||

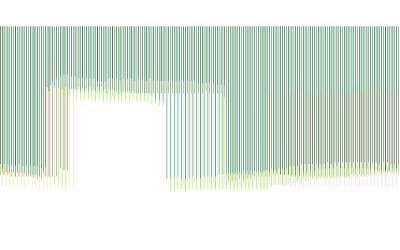

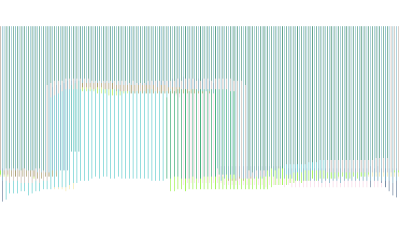

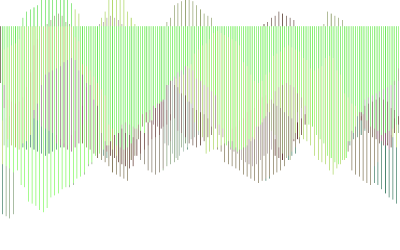

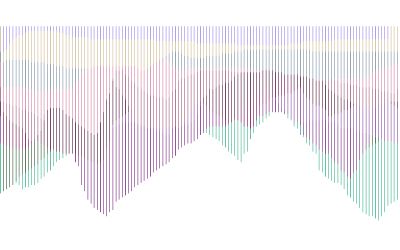

Below are my most recent ultrasonic scans of a stool. | |||

[[File:Screen Shot 2021-01-20 at 23.49.23.png|400px]] | |||

[[File:Screen Shot 2021-01-20 at 23.51.14.png|400px]] | |||

[[File:Screen Shot 2021-01-20 at 23.54.48.png|400px]] | |||

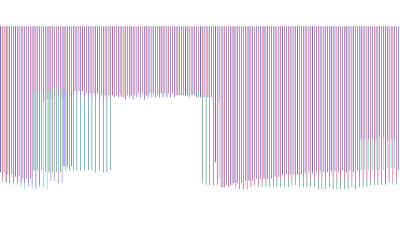

Here are two more free-hand versions: | |||

[[File:Screen Shot 2021-01-20 at 22.18.56.png|400px]] | |||

[[File:Screen Shot 2021-01-25 at 15.10.24.png|400px]] | |||

18 JANUARY 2021 | |||

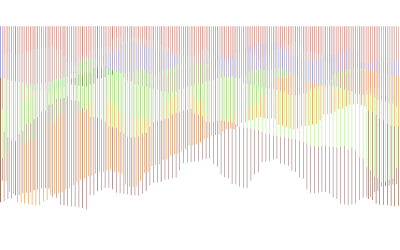

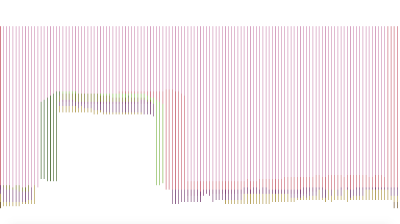

Just for fun, now that I've figured out fullscreen mode, here's a screenshot of the visualization without the fade, so the mappings simply layer on top of each other. Now you see that it could be more aesthetically interesting to have the lines offset so the next layer doesn't always erase the last (although I do like the idea of keeping a palimpsestic element). | |||

[[File:Screen Shot 2021-01-18 at 17.15.43.png|400px]] | |||

And two more of sounding a stool free-hand: | |||

[[File:Screen Shot 2021-01-19 at 16.38.29.png|400px]] | |||

[[File:Screen Shot 2021-01-19 at 16.45.11.png|400px]] | |||

16 JANUARY 2021 | |||

Just FYI, I figured out how to output my jit.lcd visual to fullscreen via a jit.window. Here is the link to the tutorial I used: https://docs.cycling74.com/max5/tutorials/jit-tut/jitterchapter38.html | |||

And here is my updated project patch: | |||

[[:File:210116_ultrasonic_drawing_with_fullscreen.maxpat]] | |||

15 JANUARY 2021 | |||

The starting point of my counter is also recognizable as the starting point of my line segments moving from left to right. In order to shift this starting point over by one pixel every other round, so that the layering lines are offset, I had the idea to define an if/then statement by even and odd numbers (that is, integers that are divisible by 2 versus those that are not), assign them the numbers 1 and 2, and then select 1 for a certain operation and 2 for another. The patch successfully gives off respective bangs for evens and odds, and even appears to apply the addition object when triggered, but the addition of +1 and -1 at the bottom still doesn't work when applied to my bigger project patch, and I don't know why yet. | |||

Here is the smaller patch focused on selecting evens and odds: | |||

[[:File:210115_odd&even.maxpat]] | |||

And here is my current main patch (using the number slider rather than my sensor data, so you can use it without the sensor), where the line does not appear to be offset every other round as I intended. Maybe it's simply not connected up properly? | |||

[[:File:210115_ultrasonic_drawing.maxpat]] | |||

Regarding my main patch above, I have one additional small question. For the object "jit.op @op + @val 1" (which you can see on the left), I would like to slow down how often the value is added (like maybe every 4th bang rather than every bang?), if that's the right way to think about it? That way the lines wouldn't fade as quickly in the image. The goal is to have more layering than is happening now. Any suggestions? | |||

14 JANUARY 2021 | |||

Better late than never! Here are two screen recordings of my interactions with my patch, to make it easier to troubleshoot: | |||

<gallery> | |||

File:210114_patch_screen_recording_1_lores.mov | |||

File:210114_patch_screen_recording_2_lores.mov | |||

File:Screen Shot 2021-01-15 at 2.59.19 PM.png | |||

File:Screen Shot 2021-01-15 at 3.20.29 PM.png | |||

</gallery> | |||

2 JANUARY 2021 (Happy New Year!) | |||

Before Christmas, I spent some time developing my patch. After a lot of trial and error, somehow I was still only able to get the number slider to work to generate the image, and not the live data coming in from my sensor (even though the numbers were printing in the console just fine). So, that's something I need help debugging. I also have very specific (hopefully straightforward!) questions that would make sense to discuss in a one-on-one meeting. | |||

Christmas patch: | |||

[[:File:Dec_21_ultrasonic_drawing.maxpat]] | |||

14 DECEMBER 2020 | |||

I've rewatched the YouTube tutorials I found, and I've tried to simplify and adapt the steps to suit my drawing needs. However, I still can't quite get it to work. I want the patch to draw vertical line segments, one at a time, plotted from left to right, and at regular time intervals as data from my ultrasonic sensor comes in. In the end, I would want it to look more or less like my drawings below (see 9 December). | |||

Here's the patch that still needs troubleshooting: | |||

*[[:File:drawing_test_line_4.maxpat]] | |||

*[[:File:Drawing_test_line-m2.maxpat]] | |||

<gallery> | |||

File:Screen Shot 2020-12-16 at 6.06.41 PM.png | |||

File:Screen Shot 2020-12-16 at 6.03.17 PM.png | |||

File:Screen Shot 2020-12-16 at 6.10.02 PM.png | |||

File:Screen Shot 2020-12-16 at 6.16.45 PM.png | |||

</gallery> | |||

9 DECEMBER 2020 | |||

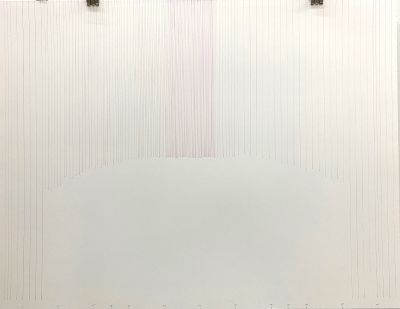

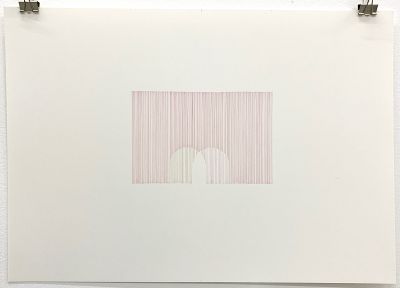

Here's an analogue drawing I'm making using the data from my ultrasonic sensor. It takes a long time with a pen and ruler! So Max/MSP is a good solution. Here's a low-quality photo of the drawing: | |||

[[File:drawing_8407.jpeg|400px]] | |||

And here is another drawing, ''Sounding Landshapes (Sphere) – two hypothetical readings showing movement of a sphere over time'': | |||

[[File:drawing_8408.jpeg|400px]] | |||

[[File:drawing_8410.jpeg|400px]] | |||

4 DECEMBER 2020 | |||

I searched around the internet for some tutorials on how to make animated drawings, and I found a really good series focused on jit.lcd. However, I still need to find out how to draw lines in a non-randomized way using my data from the ultrasonic sensor. I haven't been able to find the answer via google, I think because my question is too specific and I have a pretty clear way that I want it to look. So, feedback on how to change the objects and messages to that end would be much appreciated! | |||

*Here is my patch: [[:File:drawing_test_oval.maxpat]] | |||

*Does this help? [[:File:Drawing_test_line.maxpat]] | |||

And here are the four tutorials that taught me how to build the patch: | |||

Part 1: https://www.youtube.com/watch?v=QH6eAg2_2vU | |||

Part 2: https://www.youtube.com/watch?v=5qI2CZPWr1c&t=315s | |||

Part 3: https://www.youtube.com/watch?v=nKDP-Yo-Muk | |||

Part 4: https://www.youtube.com/watch?v=S4jsH6JyHSY&t=15s | |||

30 NOVEMBER 2020 | |||

Here are relevant artworks that I feel could be inspiring for this class: | Here are relevant artworks that I feel could be inspiring for this class: | ||

| Line 9: | Line 167: | ||

Taavi Suisalu, <em>Distant Self-Portrait</em>, 2016 | Taavi Suisalu, <em>Distant Self-Portrait</em>, 2016 | ||

https://taavisuisalu.xyz/@/distant-self-portrait/ | https://taavisuisalu.xyz/@/distant-self-portrait/ | ||

Latest revision as of 15:55, 1 February 2021

1 FEBRUARY 2021

Final patch:

File:210201_ultrasonic_drawing.maxpat

Project Summary

Sounding Landshapes – digital drawings of objects as sensed with sound. The data is collected by scanning objects repeatedly over time with a self-devised ultrasonic sensor device.

Sounding is traditionally a technique by which a boat, outfitted with a sonar device, floats atop the surface of a body of water and measures its depths. Meanwhile, sonography is a method that uses sound to read and image surfaces, most commonly in medicine and geology. In this work, I am interested in sounding as the action and sonography in the sense of its literal etymological meaning “drawing with sound.”

I have chosen sound over higher-precision options, such as lasers, because I am interested in sound as a specifically Earth-bound medium – sound cannot travel through the void of outer space, so it is explicitly terrestrial. This interests me when trying to (re)connect to the Earth through artistic practice, using sensors beyond my own body’s senses.

After making a series of hypothetical 2D sounding studies in ink on paper, I built my own sonar tool in the hope of extending those studies into the 3D space of the “real” world. For the Max/MSP aspect of the project, I mounted the ultrasonic sensor device on my hand and passed it over objects at a steady pace in a level line, so that I could still make sense of the data while leaving room for imperfection and gesture over time. To take readings, I move my hand back and forth like a scanner over the surface of whatever object I have placed on the ground. The sensor obtains a reading by emitting regular ultrasonic clicks that hit the surface of the object and bounce back to the device. The data collected during this process is sent into Max/MSP and used to reconstitute the form of the object in digital space (via a jit.lcd object). The resulting image is the object as seen through sound. Below is my process over several months of developing a Max/MSP patch to this end, along with the resulting images.
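The echo principle described above can be written as a small calculation. This is a minimal Python sketch, not the code running on the sensor: it assumes the device reports the round-trip echo time in microseconds and that sound travels at roughly 343 m/s in room-temperature air. The function name and the constant are hypothetical.

```python
# Assumed speed of sound in air at about 20 °C, in centimetres per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(round_trip_us: float) -> float:
    """Convert a round-trip echo time (microseconds) into a one-way distance (cm).

    The click travels to the surface and back, so the path is halved.
    """
    return round_trip_us * SPEED_OF_SOUND_CM_PER_US / 2.0


if __name__ == "__main__":
    # A 2000 microsecond round trip corresponds to roughly 34 cm.
    print(echo_to_distance_cm(2000.0))
```

The division by two is the only subtle step: the measured time covers the path to the object and back again.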

25 JANUARY 2021

With Miga's help I was finally able to offset the lines of the digital drawings so that they layer in a more interesting way. I've uploaded my updated patch here, to which I've added some notes so it's easier for everyone to access:

File:210125_ultrasonic_drawing.maxpat

I've also made a video screen capture, so you can see the patch in action:

Jit.lcd drawing with ultrasonic sensor input: https://vimeo.com/504393675

A new question has arisen: How do I make it so that every time the data input restarts (after having been stopped), it restarts at the far left of the window rather than in the middle? You can see in my video that when I restart the data input, the lines start from the middle of the window, not the far left. This makes things too messy when I want to start a fresh image.
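One way to think about this question, sketched in Python rather than as Max objects: the drawing position is a counter that wraps at the window width, and restarting the input would set that counter back to zero before the next reading arrives. The class and method names are hypothetical, and how the reset would be wired to the counter in the actual patch is left open.

```python
class ColumnCounter:
    """Tracks which x position the next line should be drawn at."""

    def __init__(self, width: int) -> None:
        if width < 1:
            raise ValueError("width must be at least 1")
        self.width = width
        self._x = 0

    def next(self) -> int:
        """Return the current x position, then advance (wrapping at the width)."""
        x = self._x
        self._x = (self._x + 1) % self.width
        return x

    def reset(self) -> None:
        """Jump back to the far left, e.g. whenever the data input restarts."""
        self._x = 0


if __name__ == "__main__":
    counter = ColumnCounter(width=320)
    for _ in range(160):
        counter.next()      # input stops with the position mid-window
    counter.reset()         # input restarts
    print(counter.next())   # drawing resumes at the far left
```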

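Below are my most recent ultrasonic scans of a stool.

File:Screen Shot 2021-01-20 at 23.49.23.png

File:Screen Shot 2021-01-20 at 23.51.14.png

File:Screen Shot 2021-01-20 at 23.54.48.png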

Here are two more free-hand versions:

File:Screen Shot 2021-01-20 at 22.18.56.png

File:Screen Shot 2021-01-25 at 15.10.24.png

18 JANUARY 2021

Just for fun, now that I've figured out fullscreen mode, here's a screenshot of the visualization without the fade, so the mappings simply layer on top of each other. You can see that it could be more aesthetically interesting to have the lines offset so the next layer doesn't always erase the last (although I do like the idea of keeping a palimpsestic element).

File:Screen Shot 2021-01-18 at 17.15.43.png

And two more of sounding a stool free-hand:

File:Screen Shot 2021-01-19 at 16.38.29.png

File:Screen Shot 2021-01-19 at 16.45.11.png

16 JANUARY 2021

Just FYI, I figured out how to output my jit.lcd visual to fullscreen via a jit.window. Here is the link to the tutorial I used: https://docs.cycling74.com/max5/tutorials/jit-tut/jitterchapter38.html

And here is my updated project patch:

File:210116_ultrasonic_drawing_with_fullscreen.maxpat

15 JANUARY 2021

The starting point of my counter is also recognizable as the starting point of my line segments moving from left to right. In order to shift this starting point over by one pixel every other round, so that the layering lines are offset, I had the idea to define an if/then statement by even and odd numbers (that is, integers that are divisible by 2 versus those that are not), assign them the numbers 1 and 2, and then select 1 for a certain operation and 2 for another. The patch successfully gives off respective bangs for evens and odds, and even appears to apply the addition object when triggered, but the addition of +1 and -1 at the bottom still doesn't work when applied to my bigger project patch, and I don't know why yet.
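The even/odd idea above can be reduced to a single expression. This is a minimal Python sketch of the logic only, not a translation of the patch: it assumes lines are drawn a fixed number of pixels apart (`spacing`, a hypothetical parameter), so that a one-pixel shift on every other pass lands between the previous lines instead of on top of them.

```python
def column_x(step: int, columns: int, spacing: int = 2) -> int:
    """Return the x pixel for the given reading.

    step     -- running count of readings since the drawing started
    columns  -- number of lines drawn in one left-to-right pass
    spacing  -- pixels between neighbouring lines (assumed value)
    """
    pass_index, column = divmod(step, columns)
    offset = pass_index % 2  # 0 on even passes, 1 on odd passes
    return column * spacing + offset


if __name__ == "__main__":
    # Two passes over a three-column window: 0, 2, 4 and then 1, 3, 5.
    print([column_x(step, columns=3) for step in range(6)])
```

Deriving the offset from the pass number, rather than adding +1 and -1 to a stored value, avoids the position drifting if one of the two additions is missed.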

Here is the smaller patch focused on selecting evens and odds:

File:210115_odd&even.maxpat

And here is my current main patch (using the number slider rather than my sensor data, so you can use it without the sensor), where the line does not appear to be offset every other round as I intended. Maybe it's simply not connected up properly?

File:210115_ultrasonic_drawing.maxpat

Regarding my main patch above, I have one additional small question. For the object "jit.op @op + @val 1" (which you can see on the left), I would like to slow down how often the value is added (maybe every 4th bang rather than every bang, if that's the right way to think about it). That way the lines wouldn't fade as quickly in the image. The goal is to have more layering than is happening now. Any suggestions?
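The "every 4th bang" idea amounts to a gate that counts incoming triggers and lets only every nth one through. Here is a minimal Python sketch of that logic, with hypothetical names; it says nothing about which Max objects would implement it.

```python
class EveryNth:
    """Passes one trigger out of every n."""

    def __init__(self, n: int) -> None:
        if n < 1:
            raise ValueError("n must be at least 1")
        self.n = n
        self._count = 0

    def bang(self) -> bool:
        """Register one incoming trigger; return True when it should pass."""
        self._count = (self._count + 1) % self.n
        return self._count == 0


if __name__ == "__main__":
    gate = EveryNth(4)
    # Only the 4th and 8th triggers would apply the fade step.
    print([gate.bang() for _ in range(8)])
```

With the fade applied on only one trigger in four, each line would take about four times as many readings to disappear.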

14 JANUARY 2021

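Better late than never! Here are two screen recordings of my interactions with my patch, to make it easier to troubleshoot:

File:210114_patch_screen_recording_1_lores.mov

File:210114_patch_screen_recording_2_lores.mov

File:Screen Shot 2021-01-15 at 2.59.19 PM.png

File:Screen Shot 2021-01-15 at 3.20.29 PM.png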

2 JANUARY 2021 (Happy New Year!)

Before Christmas, I spent some time developing my patch. After a lot of trial and error, somehow I was still only able to get the number slider to work to generate the image, and not the live data coming in from my sensor (even though the numbers were printing in the console just fine). So, that's something I need help debugging. I also have very specific (hopefully straightforward!) questions that would make sense to discuss in a one-on-one meeting.

Christmas patch: File:Dec_21_ultrasonic_drawing.maxpat

14 DECEMBER 2020

I've rewatched the YouTube tutorials I found, and I've tried to simplify and adapt the steps to suit my drawing needs. However, I still can't quite get it to work. I want the patch to draw vertical line segments, one at a time, plotted from left to right, and at regular time intervals as data from my ultrasonic sensor comes in. In the end, I would want it to look more or less like my drawings below (see 9 December).
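The drawing rule described above (one vertical segment per reading, plotted left to right) can be sketched as a mapping from a distance to line endpoints. This is a minimal Python sketch under stated assumptions, not the patch itself: the canvas origin is taken to be the top-left corner with y increasing downward, nearer readings are drawn as taller lines, and `max_distance_cm` is a hypothetical calibration value.

```python
from typing import Tuple


def segment_for(
    x: int, distance_cm: float, canvas_height: int, max_distance_cm: float
) -> Tuple[int, int, int, int]:
    """Return (x1, y1, x2, y2) for the vertical line representing one reading."""
    # Keep out-of-range readings on the canvas.
    clamped = min(max(distance_cm, 0.0), max_distance_cm)
    y_bottom = canvas_height - 1
    # A reading at max distance gives a zero-length line at the bottom edge;
    # a reading of zero gives a line spanning the full height.
    y_top = int(clamped / max_distance_cm * y_bottom)
    return (x, y_top, x, y_bottom)


if __name__ == "__main__":
    readings = [68.54, 50.18, 50.98]
    for x, distance in enumerate(readings):
        print(segment_for(x, distance, canvas_height=201, max_distance_cm=100.0))
```

Each tuple would correspond to one line-drawing command, with x advancing by one for every new reading.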

Here's the patch that still needs troubleshooting:

- File:drawing_test_line_4.maxpat

- File:Drawing_test_line-m2.maxpat

9 DECEMBER 2020

Here's an analogue drawing I'm making using the data from my ultrasonic sensor. It takes a long time with a pen and ruler! So Max/MSP is a good solution. Here's a low-quality photo of the drawing:

File:drawing_8407.jpeg

And here is another drawing, Sounding Landshapes (Sphere) – two hypothetical readings showing movement of a sphere over time:

File:drawing_8408.jpeg

File:drawing_8410.jpeg

4 DECEMBER 2020

I searched around the internet for some tutorials on how to make animated drawings, and I found a really good series focused on jit.lcd. However, I still need to find out how to draw lines in a non-randomized way using my data from the ultrasonic sensor. I haven't been able to find the answer via Google, I think because my question is too specific and I have a pretty clear idea of how I want it to look. So, feedback on how to change the objects and messages to that end would be much appreciated!

- Here is my patch: File:drawing_test_oval.maxpat

- Does this help? File:Drawing_test_line.maxpat

And here are the four tutorials that taught me how to build the patch:

Part 1: https://www.youtube.com/watch?v=QH6eAg2_2vU

Part 2: https://www.youtube.com/watch?v=5qI2CZPWr1c&t=315s

Part 3: https://www.youtube.com/watch?v=nKDP-Yo-Muk

Part 4: https://www.youtube.com/watch?v=S4jsH6JyHSY&t=15s

30 NOVEMBER 2020

Here are relevant artworks that I feel could be inspiring for this class:

Timo Arnall, Immaterials: Light painting WiFi, 2011 https://vimeo.com/20412632

Katie Paterson, As the World Turns, 2010 http://katiepaterson.org/portfolio/as-the-world-turns/

Taavi Suisalu, Distant Self-Portrait, 2016 https://taavisuisalu.xyz/@/distant-self-portrait/

File:Liz_Screen Recording 2020-11-28 at 16.07.43.mov

File:test_analogue-to-digital-to-analogue.maxpat

Here's a patch-in-progress, now that I have successfully gotten my ultrasonic sensor readings to appear in the Max console. I'm also including a screen recording of the Max print feed, so you can see what's going on – I see that the console is breaking up the lines of data into smaller pieces (which I imagine is simple enough to fix). For example, in Max/MSP, the word "distance" is broken up across several lines, and so are the numerical figures. For comparison, here is how the same data shows up in the Arduino serial monitor. Note that I also print the word "Distance" with each value, to reduce confusion about what the values are.

15:41:05.887 -> Distance: 68.54

15:41:05.958 -> Distance: 68.54

15:41:06.063 -> Distance: 50.18

15:41:06.171 -> Distance: 50.17

15:41:06.278 -> Distance: 50.98

15:41:06.387 -> Distance: 51.39

15:41:06.459 -> Distance: 51.37

15:41:06.563 -> Distance: 50.93

15:41:06.667 -> Distance: 50.52

15:41:06.769 -> Distance: 50.52
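The broken-up lines in the Max console are typical of serial data arriving in arbitrary chunks rather than whole lines. A common remedy is to buffer incoming bytes until a newline arrives and only then parse the line. Below is a minimal Python sketch of that idea, not the Max solution: the class name is hypothetical, and it assumes each reading is sent as `Distance: <number>` followed by a newline, with the timestamps above added by the Arduino monitor rather than sent over the wire.

```python
from typing import List


class DistanceLineAssembler:
    """Reassembles chunked serial bytes into complete distance readings."""

    def __init__(self) -> None:
        self._buffer = b""

    def feed(self, chunk: bytes) -> List[float]:
        """Add newly received bytes; return any readings completed by them."""
        self._buffer += chunk
        # Everything before the last newline is complete; the rest waits.
        *complete, self._buffer = self._buffer.split(b"\n")
        readings = []
        for raw in complete:
            text = raw.decode("ascii", errors="ignore").strip()
            if text.startswith("Distance:"):
                try:
                    readings.append(float(text[len("Distance:"):]))
                except ValueError:
                    pass  # skip lines whose number is malformed
        return readings


if __name__ == "__main__":
    assembler = DistanceLineAssembler()
    for chunk in (b"Dist", b"ance: 68.54\r\nDistance: 50", b".18\n"):
        print(assembler.feed(chunk))
```

The same principle applies in any environment: collect bytes until the line terminator, then convert the whole line at once.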

Now that I'm thinking more about the aesthetic exploration, I would be interested in having this data converted into a digital drawing that changes over time, so I suppose that would be a video or animation. I'm particularly interested in how a drawn line in digital space can be infinitely thin (unlike a pencil line, which is defined by the material), and so I could imagine playing with what are known as "space-filling curves," whose length approaches infinity – for example, Peano curves or Hilbert curves. Here's the Wikipedia page for an overview of what's behind them: