Chrzzldzzl (talk | contribs) No edit summary |

Chrzzldzzl (talk | contribs) |

||

| Line 2: | Line 2: | ||

{{#ev:youtube|JJfIA9CpOeA}} | {{#ev:youtube|JJfIA9CpOeA}} | ||

I made a supercut out of musicvideos of the Top10-SingleCharts from my birthday. The scenes comprise footage in which several instruments are "showcased" visually. I assumed that those scenes would indicate important moments of the referenced composition, like "hooks", solos or other instrumental parts. | I made a supercut out of musicvideos of the Top10-SingleCharts from my birthday. The scenes comprise footage in which several instruments are "showcased" visually. I assumed that those scenes would indicate important moments of the referenced composition, like "hooks", solos or other instrumental parts. The scenes and corresponding musical snippets are located at the exact same time where they occur in their originals. Thus the supercut yields a "meta song". | ||

In order to achieve this I trained the yolov5-object-detection-algorithm [https://pytorch.org/hub/ultralytics_yolov5/] with a custom dataset of images of Guitars, Drums, Keyboards, Microphones and Saxophones using fifty one and open images [https://voxel51.com/docs/fiftyone/tutorials/open_images.html] | In order to achieve this I trained the yolov5-object-detection-algorithm [https://pytorch.org/hub/ultralytics_yolov5/] with a custom dataset of images of Guitars, Drums, Keyboards, Microphones and Saxophones using fifty one and open images [https://voxel51.com/docs/fiftyone/tutorials/open_images.html] | ||

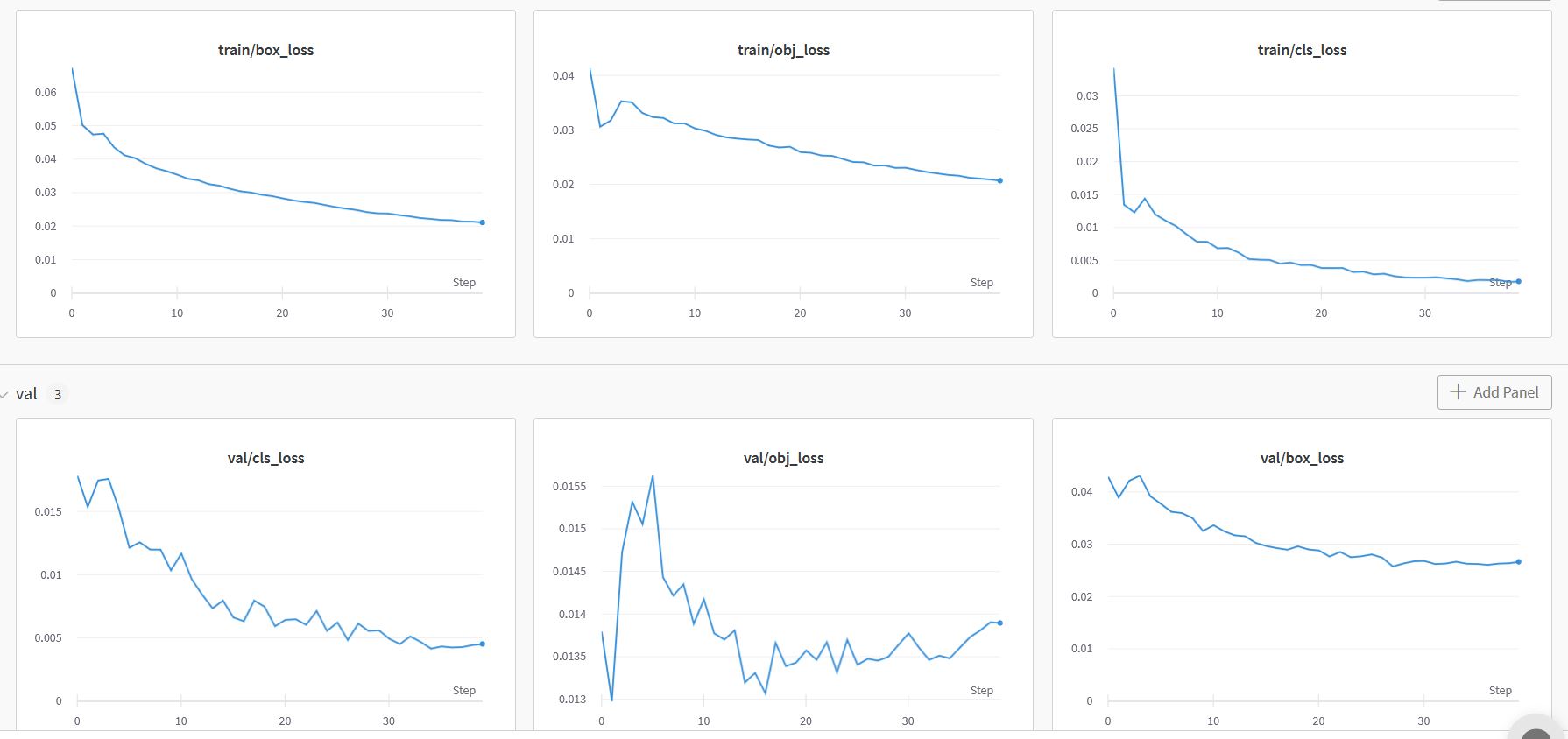

[[File:training.jpg]] | [[File:training.jpg|caption]] ''Graphs displaying the training development on training and validation data set over 40 epochs. The training took about 6 hours.'' | ||

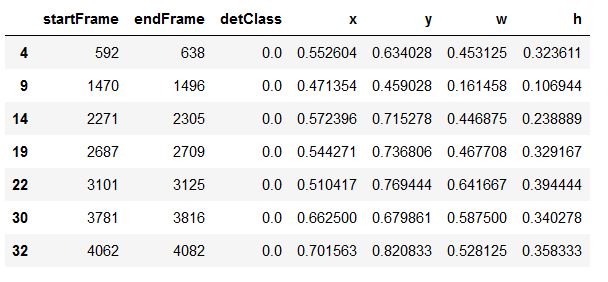

I cleaned and prepared the resulting detection data using Pandas. | I cleaned and prepared the resulting detection data using Pandas. | ||

[[File:pandas.jpg]] | |||

[[File:pandas.jpg|caption]] ''exemplary cleaned and prepared detection data set'' | |||

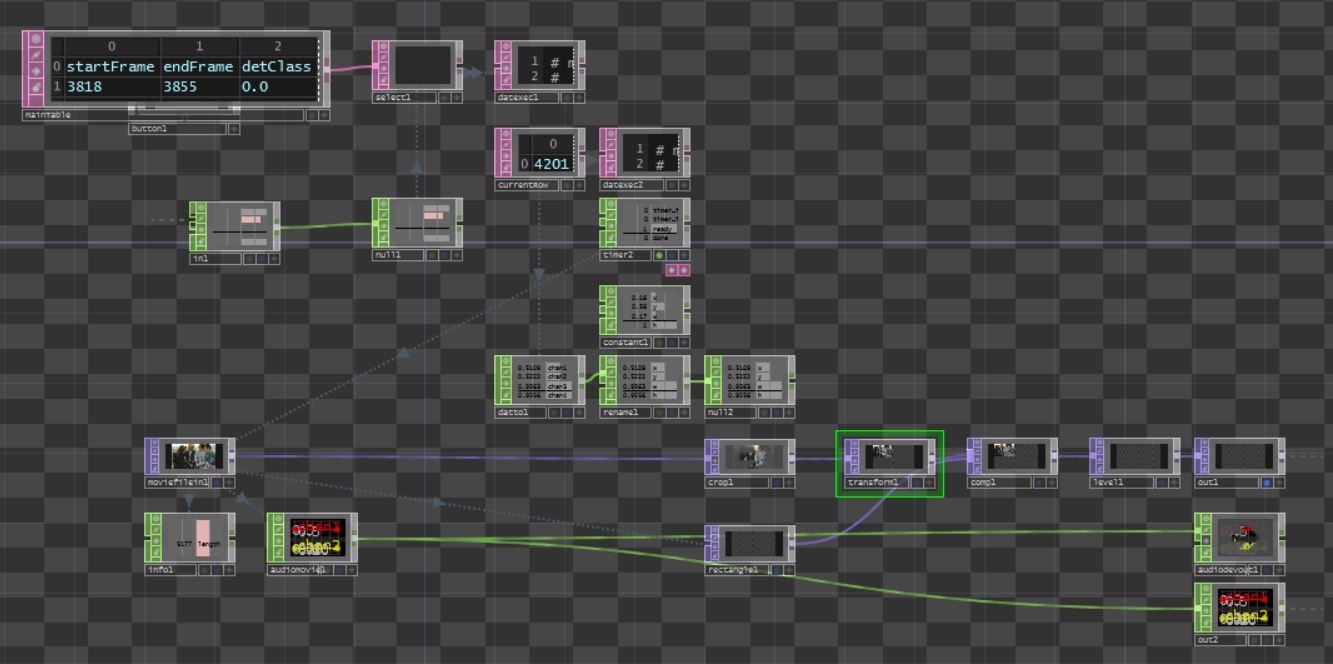

Finally I created a TouchDesigner-patch doing the cutting based on the detection data: | |||

[[File:td.jpg|caption]] ''excerpt of TouchDesigner-patch'' | |||

There are many ways to improve the project: | |||

First, the training should be repeated using more labeled images. Until now I used about 1000 images per object class. Even more important would be to introduce background data without any labeled objects to the training data set. The current model detects objects where in fact aren't any. Especially "musical keyboard" responses to a lot of nonsense. In general a deeper understanding of what it is that I was actually doing would be helpful. | |||

Second, The data preperation and cleaning could be done more meticulously. In order to simplify the process I ignored cases in which more than one object per frame was detected. I would need to figure out how to deal with a) different classes are detected in one frame and b) several instances of the same object are detected in the same frame. | |||

Third, The cutting and editing in TouchDesigner was provisional. Maybe I should consider achieving the same using ffmpeg or something similar, since it is not really necessary to operate in realtime. | |||

Fourth, I should try to increase the amount of videos from top10 to maybe top100 to investigate how the effect of it changes. The current version contains long breaks and "wrong" scenes (which don't contain any instrument) which makes it hard to even understand the supertcut approach at all. | |||

Fifth, I should reconsider my choice of detection classes. Or more in general substantiate the motivation of my supercut. Which are typical tropes and ideological references in music videos of 1990 that I want to draw the viewer/listener attention to? | |||

Sixth, are there other ways of contextualizing the supercut like composition or installation? | |||

==The Essential Mathias Reim== | ==The Essential Mathias Reim== | ||

{{#ev:youtube|yGH6nylXflE}} | {{#ev:youtube|yGH6nylXflE}} | ||

MR's hit single from 1990 in a nutshell: "ich", a decent amount of "nicht" paired with a tiny dash of "dich". | MR's hit single from 1990 in a nutshell: "ich", a decent amount of "nicht" paired with a tiny dash of "dich". | ||

Latest revision as of 21:58, 4 March 2022

Happy Birthday

{{#ev:youtube|JJfIA9CpOeA}}

I made a supercut of the music videos in the Top 10 of the singles chart on my birthday. The scenes consist of footage in which various instruments are visually "showcased". I assumed that these scenes would mark important moments in the respective songs, such as "hooks", solos or other instrumental parts. Each scene, together with its musical snippet, is placed at exactly the same time at which it occurs in its original. The supercut thus yields a "meta song".

To achieve this, I trained the YOLOv5 object detection model (https://pytorch.org/hub/ultralytics_yolov5/) on a custom dataset of images of guitars, drums, keyboards, microphones and saxophones, compiled from Open Images using FiftyOne (https://voxel51.com/docs/fiftyone/tutorials/open_images.html).

Graphs displaying the training development on training and validation data set over 40 epochs. The training took about 6 hours.

Graphs displaying the training development on training and validation data set over 40 epochs. The training took about 6 hours.
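YOLOv5 expects one text label file per image, with one line per object in the form `class x_center y_center width height`, all normalized to the image size. Open Images, by contrast, stores boxes as normalized `XMin, XMax, YMin, YMax`. FiftyOne can write the YOLOv5 format itself through its `YOLOv5Dataset` export type. The sketch below only illustrates what that conversion amounts to for a single box, together with a minimal dataset config. The class order, the helper names and the directory layout are hypothetical.

```python
# Hypothetical class order: the list index of each Open Images name
# becomes its YOLO class id.
CLASSES = ["Guitar", "Drum", "Musical keyboard", "Microphone", "Saxophone"]


def open_images_box_to_yolo(label: str, x_min: float, x_max: float,
                            y_min: float, y_max: float) -> str:
    """Convert one normalized Open Images box into a YOLO label line."""
    class_id = CLASSES.index(label)
    x_center = (x_min + x_max) / 2
    y_center = (y_min + y_max) / 2
    width = x_max - x_min
    height = y_max - y_min
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


def data_yaml(dataset_root: str) -> str:
    """Build a minimal YOLOv5 dataset config listing the custom classes."""
    names = "\n".join(f"  {i}: {name}" for i, name in enumerate(CLASSES))
    return (
        f"path: {dataset_root}\n"
        "train: images/train\n"  # assumed layout relative to `path`
        "val: images/val\n"
        f"names:\n{names}\n"
    )
```

With the images and label files in place, YOLOv5's `train.py` would be pointed at this config through its `--data` option, for example together with `--epochs 40`.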

I then cleaned and prepared the resulting detection data using pandas.

exemplary cleaned and prepared detection data set

exemplary cleaned and prepared detection data set
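The following is a minimal pandas sketch of such a cleaning step. It assumes the detections were collected into one table with one row per detected box and the hypothetical columns `video`, `frame`, `name` and `confidence`. YOLOv5's PyTorch Hub results can be turned into a DataFrame with `results.pandas().xyxy[0]`, which provides `confidence` and `name`, but `video` and `frame` would have to be added while iterating over the frames. The confidence threshold and the frame rate are assumptions. Frames with more than one remaining detection are dropped, mirroring the simplification of ignoring such frames.

```python
import pandas as pd


def clean_detections(df: pd.DataFrame, min_confidence: float = 0.5,
                     fps: float = 25.0) -> pd.DataFrame:
    """Keep confident, unambiguous detections and add a timestamp column."""
    # Discard weak detections (the threshold is an assumption; tune per model).
    df = df[df["confidence"] >= min_confidence]
    # Drop every frame that still holds more than one detection.
    ambiguous = df.duplicated(subset=["video", "frame"], keep=False)
    df = df[~ambiguous].copy()
    # Convert frame indices to seconds within the original video.
    df["time_s"] = df["frame"] / fps
    return (df.sort_values(["video", "frame"])
              .reset_index(drop=True)[["video", "frame", "time_s", "name", "confidence"]])
```

Because weak boxes are removed first, a frame with one confident and one weak detection is kept. Swapping the two steps would be the stricter choice.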

Finally, I built a TouchDesigner patch that performs the cutting based on the detection data:

excerpt of TouchDesigner-patch

excerpt of TouchDesigner-patch
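The patch itself is visual, but the logic it needs from the detection data can be sketched in plain Python. The sketch merges consecutive detected frames of the same video and class into segments, and each segment keeps its original timestamps so that the supercut's timeline mirrors the originals. This is not a reconstruction of the TouchDesigner patch. The `Segment` type, the `max_gap` tolerance and the `(video, frame, label)` input layout are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Segment:
    video: str
    label: str
    start_s: float  # start time within the original video
    end_s: float    # end time within the original video


def frames_to_segments(detections: Iterable[Tuple[str, int, str]],
                       fps: float = 25.0, max_gap: int = 2) -> List[Segment]:
    """Merge (video, frame, label) detections into time segments.

    Detections of the same video and label whose frames are at most
    `max_gap` apart are merged, so brief detection dropouts do not split
    one scene into several.
    """
    segments: List[Segment] = []
    run = None  # (video, label, first_frame, last_frame) of the open run

    def close(r):
        video, label, first, last = r
        # A frame lasts 1/fps seconds, so the run ends after its last frame.
        segments.append(Segment(video, label, first / fps, (last + 1) / fps))

    for video, frame, label in sorted(detections):
        if (run is not None and run[0] == video and run[1] == label
                and frame - run[3] <= max_gap):
            run = (video, label, run[2], frame)  # extend the open run
        else:
            if run is not None:
                close(run)
            run = (video, label, frame, frame)  # start a new run
    if run is not None:
        close(run)
    return segments
```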

There are many ways to improve the project:

First, the training should be repeated with more labeled images; so far I have used about 1000 images per object class. Even more important would be to add background images without any labeled objects to the training data set. The current model detects objects where there are none, and the "musical keyboard" class in particular responds to a lot of nonsense. In general, a deeper understanding of what I was actually doing would help.

Second, the data preparation and cleaning could be done more meticulously. To simplify the process, I ignored frames in which more than one object was detected. I would need to figure out how to handle frames in which a) different classes are detected, or b) several instances of the same class are detected.

Third, the cutting and editing in TouchDesigner was provisional. Since the cutting does not need to run in real time, I should consider doing the same with ffmpeg or a similar tool.

Fourth, I should try increasing the number of videos from the Top 10 to, say, the Top 100, to see how the effect changes. The current version contains long gaps and "wrong" scenes (which contain no instrument at all), which make it hard to grasp the supercut approach in the first place.

Fifth, I should reconsider my choice of detection classes or, more generally, substantiate the motivation for my supercut. Which typical tropes and ideological references in the music videos of 1990 do I want to draw the viewer's and listener's attention to?

Sixth, are there other ways of contextualizing the supercut, for example as a composition or an installation?
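The following is a rough sketch of an offline ffmpeg alternative to the TouchDesigner cutting. It lays time-placed segments on a single timeline and builds one ffmpeg invocation per piece. Each scene is trimmed from its source, and black, silent filler is generated for the gaps, so every scene stays at its original timestamp. The `(path, start_s, end_s)` tuple layout, the 1280x720 size, the 25 fps rate and the output names are assumptions. Overlapping segments are simply clipped in favour of the earlier one, which is only one of several possible policies.

```python
# Shared encoding settings so all pieces can be joined with the concat demuxer.
ENCODE = ["-pix_fmt", "yuv420p", "-c:v", "libx264",
          "-c:a", "aac", "-ar", "44100", "-ac", "2"]


def build_timeline(segments):
    """Lay (path, start_s, end_s) segments on one timeline starting at 0.

    Each segment keeps its original timestamps; uncovered stretches become
    gaps. Overlaps are clipped in favour of the earlier-starting segment.
    """
    pieces = []
    cursor = 0.0
    for path, start, end in sorted(segments, key=lambda s: s[1]):
        start = max(start, cursor)
        if end <= start:
            continue  # fully covered by an earlier segment
        if start > cursor:
            pieces.append(("gap", None, cursor, start))
        pieces.append(("clip", path, start, end))
        cursor = end
    return pieces


def piece_command(kind, path, start, end, out, size="1280x720", fps=25):
    """Build one ffmpeg argument list for a clip or a black, silent gap."""
    w, h = size.split("x")
    duration = f"{end - start:.3f}"
    if kind == "clip":
        # Input seeking (-ss before -i) combined with re-encoding cuts accurately.
        inputs = ["-ss", f"{start:.3f}", "-i", path]
        # Letterbox to a common size and frame rate so pieces can be concatenated.
        video_filter = ["-vf", f"scale={w}:{h}:force_original_aspect_ratio=decrease,"
                               f"pad={w}:{h}:(ow-iw)/2:(oh-ih)/2,setsar=1,fps={fps}"]
    else:
        # Generated black video plus silent stereo audio for the gap.
        inputs = ["-f", "lavfi", "-i", f"color=c=black:s={size}:r={fps}",
                  "-f", "lavfi", "-i", "anullsrc=r=44100:cl=stereo"]
        video_filter = []
    return ["ffmpeg", "-y", *inputs, "-t", duration, *video_filter, *ENCODE, out]


def concat_list(paths):
    """Contents of a list file for ffmpeg's concat demuxer."""
    return "".join(f"file '{p}'\n" for p in paths)
```

Each command could be run with `subprocess.run(cmd, check=True)`. The resulting pieces, listed in a file built by `concat_list`, could then be joined with `ffmpeg -f concat -safe 0 -i pieces.txt -c copy supercut.mp4`.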

The Essential Matthias Reim

{{#ev:youtube|yGH6nylXflE}}

Matthias Reim's hit single from 1990 in a nutshell: "ich" ("I"), a decent amount of "nicht" ("not"), and a tiny dash of "dich" ("you").