Pedroramos (talk | contribs) (→Live) |

(→Jitter) |

||

| (17 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

=Creating an AV Concert with Max/MSP/Jitter= | =Creating an AV Concert with Max/MSP/Jitter= | ||

[[File:AVkonzert_video_2.mp4 |700px]] | |||

The project developed with the support of the module consisted of an environment used for real-time audiovisual manipulation on Live Music, which is completely developed on Max/MSP/Jitter. | The project developed with the support of the module consisted of an environment used for real-time audiovisual manipulation on Live Music, which is completely developed on Max/MSP/Jitter. | ||

| Line 8: | Line 8: | ||

==Jitter== | ==Jitter== | ||

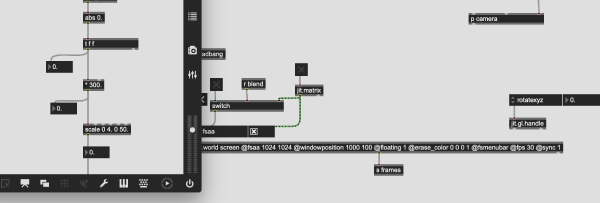

[[File:jitterAV.png|600px]] | |||

The patch developed in Max/MSP/Jitter, taking into consideration the flexibility of parameter adjustments needed for its live manipulation, as well as relatively simpleness intended to make eventual live troubleshooting and efficiency, were two of the main aspects while developing the patch. Considering it, some specific aims were addressed from the beginning of the patching, simultaneously with an understanding of general functioning of Jitter and some of the main objects used for it. | The patch developed in Max/MSP/Jitter, taking into consideration the flexibility of parameter adjustments needed for its live manipulation, as well as relatively simpleness intended to make eventual live troubleshooting and efficiency, were two of the main aspects while developing the patch. Considering it, some specific aims were addressed from the beginning of the patching, simultaneously with an understanding of general functioning of Jitter and some of the main objects used for it. | ||

That said, the first essential aim was resolving the audio reactivity from a sound source into its respective visual modulation, which was then adjustment by the defined parameters in each case - such as scale, position, and so forth. Considering the possibility of working with more than one video channel, one of the first aspects took into consideration was also the possibility of working simultaneously with two different video outputs, which could be either manipulated from the same or different audio sources. | That said, the first essential aim was resolving the audio reactivity from a sound source into its respective visual modulation, which was then adjustment by the defined parameters in each case - such as scale, position, and so forth. Considering the possibility of working with more than one video channel, one of the first aspects took into consideration was also the possibility of working simultaneously with two different video outputs, which could be either manipulated from the same or different audio sources. | ||

The paths available on the documentation register the process of which the patch was developed to resolve its intended functionality. Additional experiments with visual manipulation on Jitter through sensors and Arduino also took place, and it is made important to highlight that the stage in which the project took place was one of the possibilities of such framework, but not by far the only. |

The paths available on the documentation register the process of which the patch was developed to resolve its intended functionality. Additional experiments with visual manipulation on Jitter through sensors and Arduino also took place, and it is made important to highlight that the stage in which the project took place was one of the possibilities of such framework, but not by far the only. | ||

==Live== | ==Live== | ||

<gallery> | |||

File: AVkonzert_flyer.jpeg | |||

File:AVkonzert_1.jpeg | |||

</gallery> | |||

The concert, which took place at the Other Music Academy (OMA) - an active venue on the support of local artists and concerts of artists from outside of Weimar - on 16.01.2020, and happened in collaboration with Brazilian producer and composer João Mansur, who is based in Portugal. | The concert, which took place at the Other Music Academy (OMA) - an active venue on the support of local artists and concerts of artists from outside of Weimar - on 16.01.2020, and happened in collaboration with Brazilian producer and composer João Mansur, who is based in Portugal. | ||

| Line 21: | Line 25: | ||

For its realisation, months of preparation took place in parallel with the artistic and technical setup of the system described. Such preparations consisted either on management setup with the venue, technical setup for the realisation of the concert regarding the audio requirements and available structure of the venue, as well as marketing strategies for its divulgation. In parallel, artistic conceptual preparation took place through conversations with the musician regarding the general aesthetics of the visuals in accordance to the atmosphere of the music, which consisted on the tracks on his upcoming debut solo album, Trombada Tapes, due to be released later in 2020. | For its realisation, months of preparation took place in parallel with the artistic and technical setup of the system described. Such preparations consisted either on management setup with the venue, technical setup for the realisation of the concert regarding the audio requirements and available structure of the venue, as well as marketing strategies for its divulgation. In parallel, artistic conceptual preparation took place through conversations with the musician regarding the general aesthetics of the visuals in accordance to the atmosphere of the music, which consisted on the tracks on his upcoming debut solo album, Trombada Tapes, due to be released later in 2020. | ||

[[File:AVkonzert_video_1.mp4|700px]] | |||

[ | For the concert, in addition to the jitter audio reactive visuals - that were manipulated in real time -, footage provided by the artist was also used for real time manipulation. The footage, which in part was featured on the first music video of the album, [https://www.youtube.com/watch?v=RKuynJ9MtRg Parapeito Margarina], was then used for juxtapositions, cropping, amongst other real time editing techniques. | ||

Technically, the sound used for live audio reactivity consisted on a direct input from one of the sound inputs coming from the musician. On the concert, two projectors pointed to the stage were positioned in the room. The consideration of the room in which it was taking place was an important aspect on its positioning placement, and the two projectors were projecting the same video output simultaneously. Although some of the circumstances could be foreseen, as with any live manipulating environment, some aspects of what can happen live go beyond strictly what one can know in advance of what can happen - namely eventual adaptations to technical issues, reaction of the audience, and so forth. For such case, technical preparation and adjustments of the system to the live environment and rehearsals together with the musician were essential for the concert to run smoothly and successfully. | Technically, the sound used for live audio reactivity consisted on a direct input from one of the sound inputs coming from the musician. On the concert, two projectors pointed to the stage were positioned in the room. The consideration of the room in which it was taking place was an important aspect on its positioning placement, and the two projectors were projecting the same video output simultaneously. Although some of the circumstances could be foreseen, as with any live manipulating environment, some aspects of what can happen live go beyond strictly what one can know in advance of what can happen - namely eventual adaptations to technical issues, reaction of the audience, and so forth. For such case, technical preparation and adjustments of the system to the live environment and rehearsals together with the musician were essential for the concert to run smoothly and successfully. | ||

==Final Considerations== | ==Final Considerations== | ||

| Line 33: | Line 38: | ||

For the concert in question, also due to time restrictions, a concert for 9 songs was developed with relatively simple manipulation of Graphic objects. The additional altering of colours and specific parameters of the objects, however, already opened a wide range of possibilities. As said, possibilities for increasing such system has also proven itself possible, in ways that the same concert environment might be improved - either in levels of audio control and analysis, as well as further use of graphics effects and objects. The use of Max/MSP/Jitter for such appliances can, by empirical experience, only be recommended, regardless of strong previous background in Max/MSP/Jitter. | For the concert in question, also due to time restrictions, a concert for 9 songs was developed with relatively simple manipulation of Graphic objects. The additional altering of colours and specific parameters of the objects, however, already opened a wide range of possibilities. As said, possibilities for increasing such system has also proven itself possible, in ways that the same concert environment might be improved - either in levels of audio control and analysis, as well as further use of graphics effects and objects. The use of Max/MSP/Jitter for such appliances can, by empirical experience, only be recommended, regardless of strong previous background in Max/MSP/Jitter. | ||

== Jitter | == Processual Development of Max/MSP/Jitter patches == | ||

[[Media:191107_jitter_audioreactive.maxpat |1. First Sound Reactivity Attempt]] | [[Media:191107_jitter_audioreactive.maxpat |1. First Sound Reactivity Attempt]] | ||

Latest revision as of 14:03, 27 April 2020

Creating an AV Concert with Max/MSP/Jitter

The project developed with the support of the module consisted of an environment for real-time audiovisual manipulation of live music, built entirely in Max/MSP/Jitter.

Although the first contact with Jitter happened through this module, previous knowledge of Max/MSP already existed from the module My Computer, Max & I, taken in SoSe 2019. This documentation explains the steps from the building of the environment to its use in the live concert, which took place in Weimar in January 2020.

Jitter

Two main concerns guided the development of the patch in Max/MSP/Jitter: the flexibility of parameter adjustment needed for live manipulation, and a relative simplicity intended to keep the patch efficient and to make eventual live troubleshooting easier. With these in mind, some specific aims were addressed from the beginning of the patching, alongside gaining an understanding of the general functioning of Jitter and of some of its main objects.

That said, the first essential aim was translating the audio from a sound source into a corresponding visual modulation, which was then adjusted through the parameters defined in each case, such as scale, position, and so forth. Since more than one video channel might be needed, one of the first aspects taken into consideration was also the possibility of working simultaneously with two different video outputs, which could be driven either by the same or by different audio sources. The patches available in this documentation record the process through which the patch was developed to reach its intended functionality. Additional experiments with visual manipulation in Jitter through sensors and Arduino also took place, and it is important to highlight that the stage at which the project was presented is one possibility of such a framework, but by far not the only one.
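The audio-to-visual mapping described above can be illustrated with a minimal sketch in Python. It is not the Max/MSP/Jitter patch itself, which is a visual patch and is not reproduced here; the names rms and AudioToScale, the parameter ranges, and the smoothing approach are all assumptions made for illustration. The sketch measures the level of a block of audio samples, smooths it, and maps it onto a scale range, with one independent mapper per video output.

```python
import math


def rms(block):
    """Root-mean-square level of one block of audio samples in [-1.0, 1.0]."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))


class AudioToScale:
    """Hypothetical mapper from an audio level to a visual scale value."""

    def __init__(self, min_scale=0.5, max_scale=2.0, smoothing=0.8):
        self.min_scale = min_scale
        self.max_scale = max_scale
        # 0.0 means no smoothing; values close to 1.0 react more slowly.
        self.smoothing = smoothing
        self.level = 0.0

    def update(self, block):
        """Feed one audio block and return the scale for the next frame."""
        # One-pole low-pass filter so the visuals do not flicker.
        self.level = (self.smoothing * self.level
                      + (1.0 - self.smoothing) * rms(block))
        clamped = min(max(self.level, 0.0), 1.0)
        return self.min_scale + clamped * (self.max_scale - self.min_scale)


# Two video outputs, each with its own mapping (assumed values).
output_a = AudioToScale(smoothing=0.0)
output_b = AudioToScale(min_scale=1.0, max_scale=3.0, smoothing=0.5)

# The same audio source drives both outputs here; passing a different
# block to each mapper would model two separate audio sources.
block = [0.5, -0.5, 0.5, -0.5]
scale_a = output_a.update(block)
scale_b = output_b.update(block)
```

Keeping one mapper object per output means each video channel can have its own range and response speed, whether both follow the same audio source or different ones.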

Live

The concert took place on 16.01.2020 at the Other Music Academy (OMA), a venue active in supporting local artists and in hosting concerts by artists from outside Weimar. It happened in collaboration with the Brazilian producer and composer João Mansur, who is based in Portugal.

Its realisation required months of preparation, in parallel with the artistic and technical setup of the system described. These preparations consisted of organisational arrangements with the venue, technical setup for the concert with regard to the audio requirements and the available infrastructure of the venue, and marketing strategies for its promotion. In parallel, the artistic concept was prepared through conversations with the musician about the general aesthetics of the visuals in accordance with the atmosphere of the music, which consisted of the tracks from his upcoming debut solo album, Trombada Tapes, due to be released later in 2020.

For the concert, in addition to the audio-reactive Jitter visuals, which were manipulated in real time, footage provided by the artist was also manipulated in real time. The footage, part of which was featured in the first music video of the album, Parapeito Margarina (https://www.youtube.com/watch?v=RKuynJ9MtRg), was used for juxtapositions, cropping, and other real-time editing techniques.

Technically, the sound used for live audio reactivity was a direct feed from one of the musician's sound inputs. For the concert, two projectors were positioned in the room and pointed at the stage. The characteristics of the room were an important factor in their placement, and both projectors showed the same video output simultaneously. Although some of the circumstances could be foreseen, as with any live manipulation environment, part of what happens live cannot be known in advance, such as adaptations to technical issues, the reaction of the audience, and so forth. For this reason, technical preparation, adjustment of the system to the live environment, and rehearsals together with the musician were essential for the concert to run smoothly and successfully.

Final Considerations

One of the main conclusions from the learning experience across the different stages was that, although not always the go-to solution for live video manipulation for music, Jitter proved to be an extremely flexible environment that offers a wide range of possibilities for working with graphics processing and video manipulation. The good integration offered by the Max/MSP framework for working with music also makes it easier to control different parameters of the audio inputs, as well as their subsequent modulation of visual elements in Jitter.

For the concert in question, partly due to time restrictions, a set of 9 songs was developed with relatively simple manipulation of graphic objects. The additional altering of colours and of specific parameters of the objects, however, already opened up a wide range of possibilities. As mentioned, the system can also be extended, so that the same concert environment might be improved both in the level of audio control and analysis and in the further use of graphics effects and objects. Based on this experience, the use of Max/MSP/Jitter for such applications can only be recommended, even without a strong previous background in Max/MSP/Jitter.

Processual Development of Max/MSP/Jitter patches

1. First Sound Reactivity Attempt

2. Ongoing development of Real Time Manipulation Environment

3. Real Time manipulation with Arduino and Audio Input

4. Ongoing development for Real Time Manipulation Environment