Paulina.chw (talk | contribs)

INTERACTION WITH UNITY | |||

I was very interested to create an interactive VR experience. Unlikely during the pandemic, it’s not possible to use the university rooms. I tried to develop a basic interactive installation in Unity instead. The interaction will be based on the position of the user. If the person will come closer to the sensor, the display will change according to it. I want to create a simple experience, a situation, that would be not possible in the real world. | |||

[[File:Unityosc1235 (1) (1).gif]] | |||

______________________________________________________________________________________ | |||

IMPLEMENTATION | |||

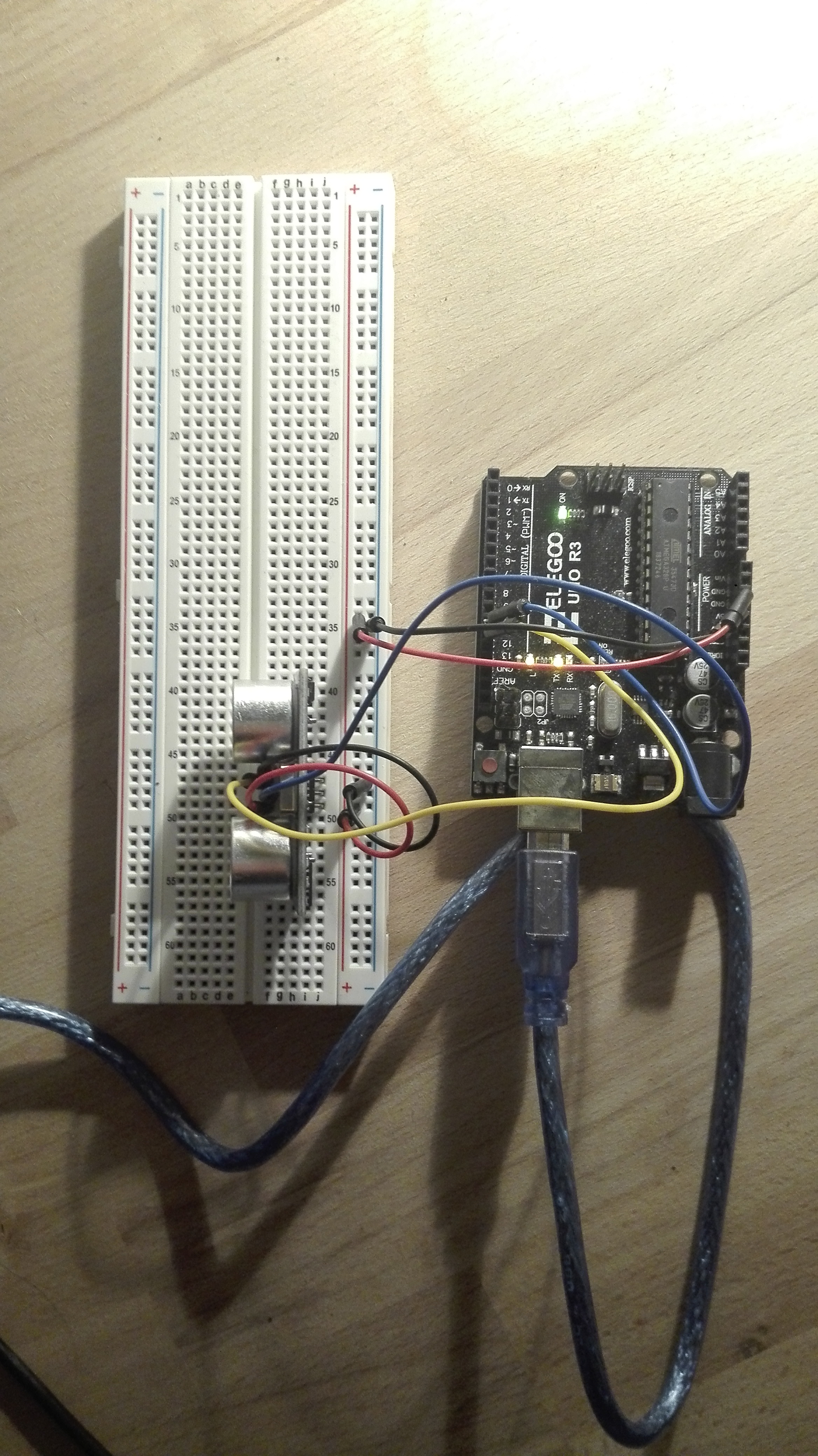

The installation is based on Arduino ultrasonic sensor, Max Msp loop, and Unity real-time rendering. I create a room with light up spheres in Unity, that is displayed on the laptop monitor. In front of the monitor, the ultrasonic sensor is installed. Each time the user approaches the screen, the installation reacts with the sound and movement of the spheres. | |||

data loop: ultrasonic sensor -> MaxMsp (sound) -> OSC protocol -> Unity (image) | |||

[[File:Board444.jpeg]] | |||

Arduino ultrasonic sensor detects the position of the user | |||

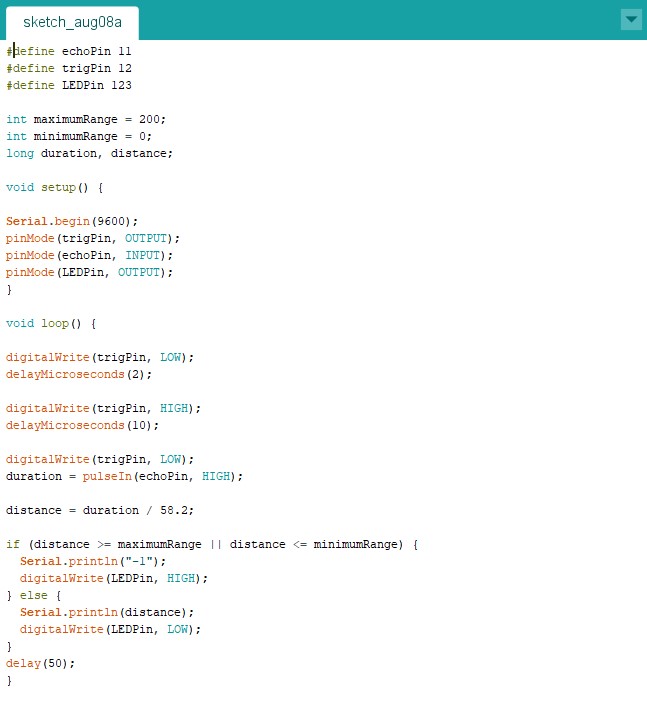

[[File: | [[File:Arduino loop1.jpeg]] | ||

Data is collected and transformed in the Arduino code | |||

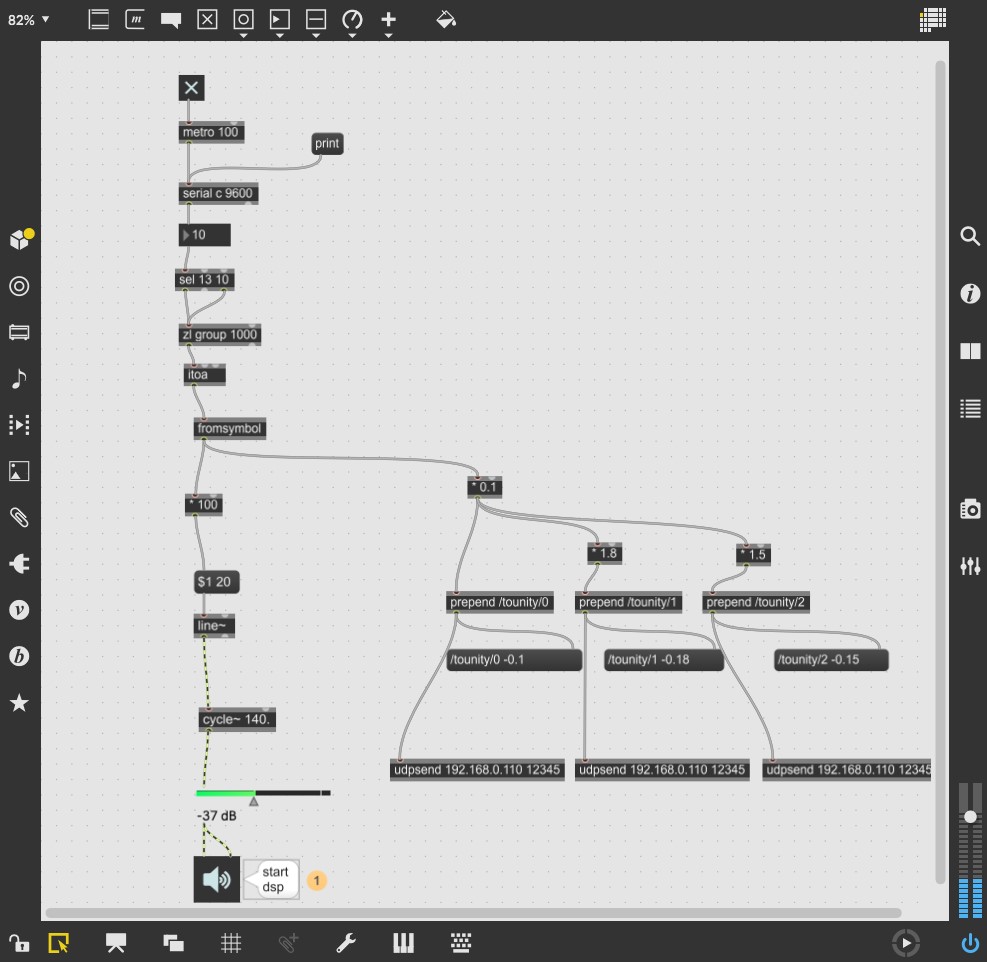

[[File:Max loop2.jpeg]] | |||

Data is received, unpacked and transformed in the Max Msp loop, creates sound according to data | |||

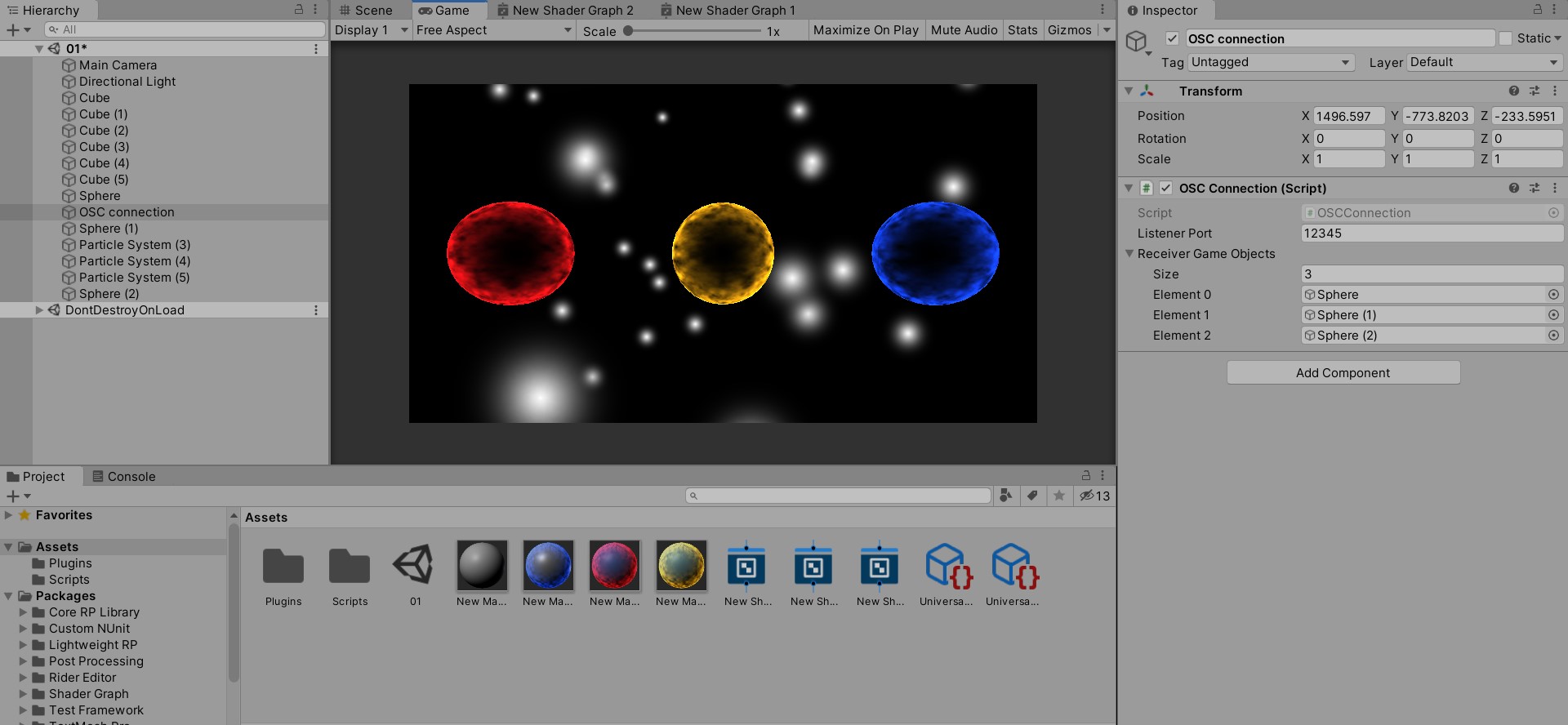

[[File:Unity33.jpeg]] | |||

Data is sent from Max Msp to Unity by the OSC protocol, external c# plugins in Unity receive the data and transform objects in the scene | |||

______________________________________________________________________________________ | |||

[[File:YouCut 20200907 193520892 Trim.mp4]] | |||

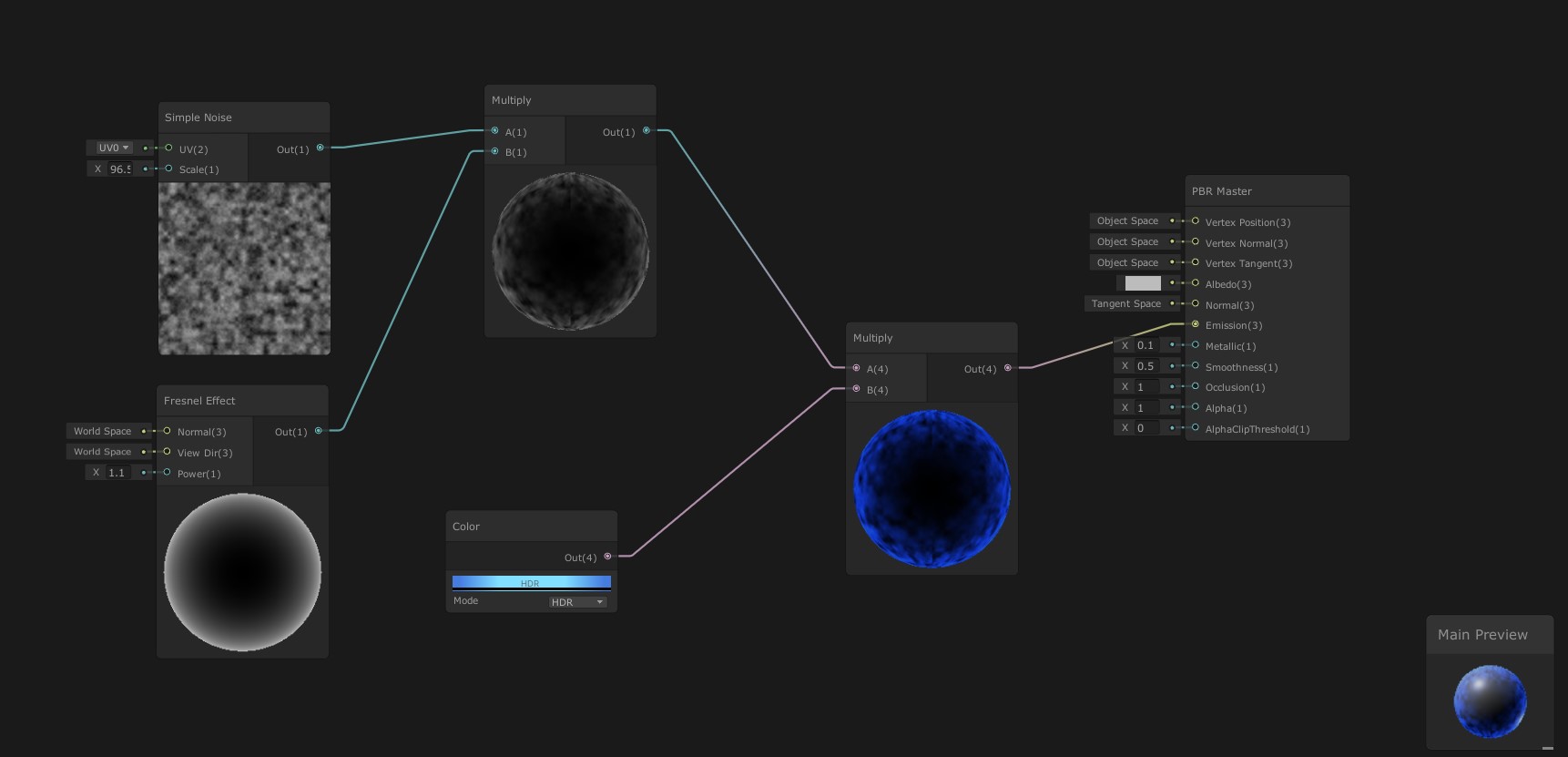

UNITY SCENE | |||

I wanted to create a dark game scene, that is lighted up by the environment. I also wanted to play with Unity modules. For that, I created a Shader Graph Material. I used also the Particle System to make glowing modules. | |||

[[File:SCHADER111.jpeg]] | |||

Latest revision as of 18:12, 7 September 2020

INTERACTION WITH UNITY

I was very interested in creating an interactive VR experience. Unfortunately, during the pandemic it was not possible to use the university rooms, so I developed a basic interactive installation in Unity instead. The interaction is based on the position of the user: when the person comes closer to the sensor, the display changes accordingly. I want to create a simple experience, a situation that would not be possible in the real world.

______________________________________________________________________________________

IMPLEMENTATION

The installation is based on an Arduino ultrasonic sensor, a Max/MSP loop, and Unity real-time rendering. I created a room with light-up spheres in Unity, which is displayed on the laptop monitor. The ultrasonic sensor is installed in front of the monitor. Each time the user approaches the screen, the installation reacts with sound and with movement of the spheres.

Data loop: ultrasonic sensor -> Max/MSP (sound) -> OSC protocol -> Unity (image)

The Arduino ultrasonic sensor detects the position of the user by measuring their distance from the sensor.

The data is collected and transformed in the Arduino code.
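The transformation done on the sensor side can be sketched roughly as follows. This is only an illustration in Python, not the Arduino code used in the project: it assumes a sensor that reports the round-trip echo time in microseconds, and the function names and the `near_cm` / `far_cm` limits are hypothetical.

```python
# Sketch only: assumes the sensor reports a round-trip echo time in microseconds.
SPEED_OF_SOUND_CM_PER_US = 0.0343  # about 343 m/s at room temperature


def echo_to_distance_cm(echo_us: float) -> float:
    """Convert a round-trip echo time (microseconds) to a one-way distance (cm)."""
    # The pulse travels to the user and back, so the distance is half the path.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


def proximity(distance_cm: float, near_cm: float = 5.0, far_cm: float = 200.0) -> float:
    """Map a distance to a value from 0.0 (far away) to 1.0 (very close).

    near_cm and far_cm are hypothetical working limits, not values from the project.
    """
    clamped = min(max(distance_cm, near_cm), far_cm)
    return (far_cm - clamped) / (far_cm - near_cm)
```

Normalising the reading to a 0 to 1 range early makes it easier to reuse the same value for both the sound and the image later in the chain.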

The data is received, unpacked, and transformed in the Max/MSP loop, which creates sound according to the data.
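As a rough illustration of the unpack-and-transform step, the Python sketch below parses readings from a chunk of serial bytes and rescales a value linearly, similar in spirit to what the Max `scale` object does. It assumes the Arduino sends one ASCII number per line; that format, the function names, and the 220 to 880 Hz range in the usage note are assumptions, not details taken from the actual patch.

```python
from typing import List


def parse_readings(buffer: bytes) -> List[float]:
    """Split a chunk of serial bytes into numeric readings, one per line."""
    readings = []
    for line in buffer.decode("ascii", errors="ignore").splitlines():
        try:
            readings.append(float(line.strip()))
        except ValueError:
            continue  # skip empty or malformed lines
    return readings


def scale(value: float, in_low: float, in_high: float,
          out_low: float, out_high: float) -> float:
    """Linearly map value from the input range to the output range."""
    return out_low + (value - in_low) * (out_high - out_low) / (in_high - in_low)
```

For example, `scale(0.5, 0.0, 1.0, 220.0, 880.0)` maps a mid-range proximity value to a hypothetical oscillator frequency of 550 Hz.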

The data is sent from Max/MSP to Unity via the OSC protocol; external C# plugins in Unity receive the data and transform objects in the scene.
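To make the OSC step more concrete, here is a minimal Python sketch of what a single-float OSC message looks like on the wire and how a receiver could unpack it. It is not the C# plugin used in Unity; the address `/proximity` and all function names are hypothetical, and only the simplest case (one address, one float argument) is handled.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc_float(address: str, value: float) -> bytes:
    """Build an OSC message carrying a single 32-bit big-endian float."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


def _read_string(packet: bytes, offset: int):
    """Read a padded OSC string and return it with the offset of the next field."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    next_offset = (end + 4) & ~3  # skip the terminator and padding
    return text, next_offset


def decode_osc_float(packet: bytes):
    """Return (address, value) from a single-float OSC message."""
    address, offset = _read_string(packet, 0)
    type_tags, offset = _read_string(packet, offset)
    if type_tags != ",f":
        raise ValueError("expected exactly one float argument")
    (value,) = struct.unpack_from(">f", packet, offset)
    return address, value
```

On the receiving side, the decoded value could then drive a property of the spheres, such as their position or brightness; which property is used here is not specified above.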

______________________________________________________________________________________

UNITY SCENE

I wanted to create a dark game scene that is lit by the environment. I also wanted to experiment with Unity modules, so I created a Shader Graph material and used the Particle System to make glowing modules.