Latest revision as of 11:43, 2 September 2020

Acoustic Interfaces

Instructor: Clemens Wegener

Credits: 6 ECTS, 3 SWS

Project by Joel Schaefer

INTRODUCTION

In the course "Acoustic Interfaces" we wrote a C++ machine-learning library based on a K-clustering algorithm. Its purpose is to classify microphone inputs into different frequency-based classes. After this clustering (training) process, the algorithm is able to classify similar microphone input in real time. The algorithm runs on a Teensy 4.0 with an audio shield.

I have to say that it was quite hard for me to come up with an idea for a creative, musical use of this technique. As I didn't want to use this algorithm for the classic purpose of sound detection, I came up with a more abstract use.
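To make the classification step more concrete, here is a minimal sketch of how a trained clustering model could assign a new input to a class. It assumes a k-means-style model in which every class is represented by one centroid, and a feature vector of four frequency-band energies. The names `Features`, `kBands` and `classify` are hypothetical and are not taken from the course library.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical feature size: one energy value per frequency band.
constexpr std::size_t kBands = 4;
using Features = std::array<float, kBands>;

// Squared Euclidean distance between two feature vectors.
float squaredDistance(const Features& a, const Features& b) {
    float sum = 0.0f;
    for (std::size_t i = 0; i < kBands; ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return sum;
}

// Returns the index of the centroid that is closest to `input`.
// The centroids are assumed to come from an earlier training (clustering) run.
std::size_t classify(const Features& input, const std::vector<Features>& centroids) {
    assert(!centroids.empty());
    std::size_t best = 0;
    float bestDist = std::numeric_limits<float>::max();
    for (std::size_t c = 0; c < centroids.size(); ++c) {
        const float d = squaredDistance(input, centroids[c]);
        if (d < bestDist) {
            bestDist = d;
            best = c;
        }
    }
    return best;
}
```

Because only distances to a handful of centroids are computed per input, this kind of lookup is cheap enough for real-time use on a microcontroller.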

IDEA

My idea was an intention-based gesture/timbre detection through the piezo microphone input. This input is expressed through MIDI notes and musical scales. For example, an aggressive touch on the interface surface should be expressed in a somehow corresponding sequence of notes (or melody).

So the project I came up with is basically a sequencer.

INSPIRATION

When thinking about what kind of sequencer I wanted to build, I was very inspired by the Eurorack sequencer by Make Noise called "René". It is a sequencer based on motion inside a Cartesian coordinate system. What I found interesting is that this way of sequencing doesn't work with one main tempo driving the sequence, but with several tempos (depending on the number of dimensions). Each tempo or clock drives the sequence on only one axis. That means that when clocks (or gates) of different speeds (or rhythms) are fed into the sequencer, the resulting sequence can be very diverse. With a normal (1-dimensional) sequencer, such or similar results from only one sequence would need modulation of pitch values or randomization of the rhythm patterns.
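The two-clock principle can be sketched in a few lines. This is only an illustration of the idea described above, not the firmware of the René or of my sequencer; the struct `GridSequencer` and its members are hypothetical names, and a square grid stored row by row is assumed.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical 2-D step position on a square grid (size x size steps).
struct GridSequencer {
    std::size_t size;   // steps per axis, e.g. 4 for a 4x4 grid
    std::size_t x = 0;  // column, advanced only by the X clock
    std::size_t y = 0;  // row, advanced only by the Y clock

    explicit GridSequencer(std::size_t gridSize) : size(gridSize) {
        assert(gridSize > 0);
    }

    // Called on every pulse of the X clock: move one column, wrap at the edge.
    void clockX() { x = (x + 1) % size; }

    // Called on every pulse of the Y clock: move one row, wrap at the edge.
    void clockY() { y = (y + 1) % size; }

    // Index of the active step in a row-major array of size * size values.
    std::size_t activeStep() const { return y * size + x; }
};
```

If both clocks run at the same speed, the position moves along a diagonal; as soon as the two clocks differ, the order in which the steps are visited changes without any step value being edited.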

AUDIENCE

This project is aimed at musicians who use analog synthesizers, especially artists who use their modular/analog gear for live and improvised performances, because the idea of the intentional interface stands for the spontaneous and immediate creation of melodies and patterns.

APPROACH

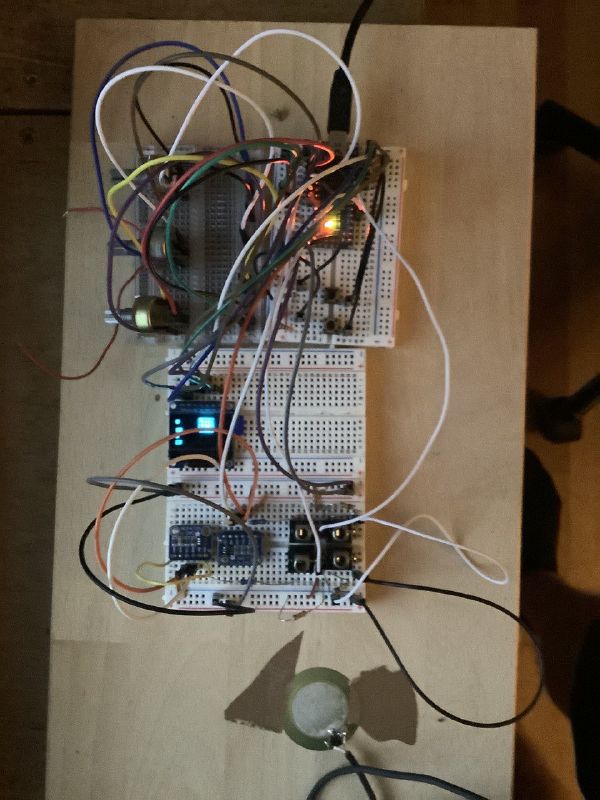

So I decided to design my own little grid-based sequencer. I'm calling it grid-based because it only has 2 dimensions. My plan was to build it as hardware, with analog parts. That means I didn't want to build a MIDI sequencer but instead a digital CV/gate sequencer for analog gear. For the UI part I was inspired by the Teensy-based, open-source Eurorack module "Ornament & Crime" by Patrick Dowling (aka pld), Max Stadler (aka mxmxmx) and Tim Churches (aka bennelong.bicyclist). It is known for its many functions and flexibility, yet its whole UI is based on an OLED display, two rotary encoders and 2 buttons. I planned to do it similarly: also an OLED display and 2 rotary encoders, but only one button. Concerning analog inputs and outputs, I built two audio inputs for the 2 clocks or gates that drive the X-axis and the Y-axis. On the output side I integrated two outputs (0-4 volts = range of 4 octaves) with external 12-bit DAC boards to get proper resolution, voltage range and precision. One outputs the CV signal, the other one the gate signals.
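The relation between notes and the 12-bit CV output can be sketched as follows. The sketch assumes the usual 1 V per octave scaling (which matches 0-4 volts for 4 octaves) and that DAC code 4095 corresponds to exactly 4.0 V; the real scaling depends on the DAC reference and the output stage. The function name `semitoneToDacCode` and the constants are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

constexpr float kFullScaleVolts = 4.0f;  // assumed output range: 0 to 4 V
constexpr int kMaxCode = 4095;           // largest value of a 12-bit DAC
constexpr int kSemitonesPerOctave = 12;

// Converts a semitone offset (0 = lowest note, 48 = four octaves up)
// into a 12-bit DAC code, assuming 1 V per octave.
std::uint16_t semitoneToDacCode(int semitone) {
    const int maxSemitone = static_cast<int>(kFullScaleVolts) * kSemitonesPerOctave;
    if (semitone < 0) semitone = 0;                      // clamp below range
    if (semitone > maxSemitone) semitone = maxSemitone;  // clamp above range
    const float volts = static_cast<float>(semitone) / kSemitonesPerOctave;
    return static_cast<std::uint16_t>(std::lround(volts / kFullScaleVolts * kMaxCode));
}
```

Under these assumptions one semitone spans roughly 85 DAC codes (4095 / 48), which leaves headroom for calibration and fine tuning.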

PROCESS OF WORK

About the process of building and coding this project I can honestly say that I learned a lot, but also that I heavily underestimated the work and knowledge that was needed. Unfortunately I faced many problems in the coding of the sequencer library: some are minor bugs, some are stability issues, others are hardware debouncing issues. This, together with my lack of knowledge of programming in C++, led to the fact that the actual circuit and algorithm I built/wrote for the Teensy can't yet do what my initial idea was. To be concrete:

- the connection to our ML library isn't there yet, and therefore the whole "intentional interface" is missing
- the maximum size of the grid is 4, that means 16 steps (I wanted to make it flexible so that the grid size can be chosen)
- I wasn't able to debounce the rotary encoders enough for acceptable results (so I replaced them with 3 ordinary potentiometers), which makes the menu diving rather impractical
- the quantizer part is missing, so it just outputs all 12 semitones per octave
- extra functions like playback direction, randomization features, etc. are missing
- overall running stability isn't good
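For the encoder bounce problem mentioned above, one common software approach is a table-based quadrature decoder, which only counts valid transitions between the two encoder pins and ignores impossible jumps. This is not what I implemented, and I can't say whether it would have been enough for my hardware; it is a minimal sketch with hypothetical names (`QuadratureDecoder`, `update`), and which sign means "clockwise" depends on the wiring.

```cpp
#include <cassert>
#include <cstdint>

// Table-based decoder for a two-pin (A/B) rotary encoder.
class QuadratureDecoder {
public:
    // Feed the current logic levels of pins A and B.
    // Returns -1 or +1 for a valid quarter step, 0 for no change or an invalid jump.
    int update(bool a, bool b) {
        // Index = (previous state << 2) | current state, state = (A << 1) | B.
        static constexpr std::int8_t kTable[16] = {
             0, -1, +1,  0,
            +1,  0,  0, -1,
            -1,  0,  0, +1,
             0, +1, -1,  0};
        const std::uint8_t curr = static_cast<std::uint8_t>((a ? 2 : 0) | (b ? 1 : 0));
        const int delta = kTable[(prev_ << 2) | curr];
        prev_ = curr;
        return delta;
    }

private:
    std::uint8_t prev_ = 0;  // assumes both pins read low at start-up
};
```

A contact that bounces on one pin produces alternating -1 and +1 steps that cancel out, so the accumulated position settles on the correct value once the contact is stable.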

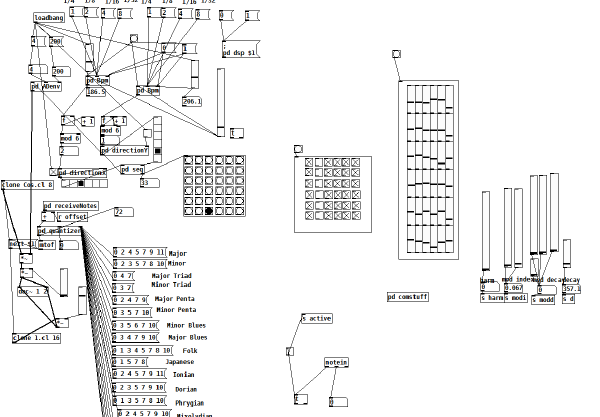

In principle the algorithm is working, as can be seen in the 2nd video at the top, but I would have needed much more time during the semester to get closer to what I wanted. So I decided to demonstrate my project somewhat theoretically, in the sense that I'm using my PureData patch to show a shortened version of my idea (video 1 above is a musical demonstration). In that version most things work as imagined.

There is:

- a 6x6 grid
- note mapping from the Teensy serial stream
- a quantizer with a lot of musical scales
- the ability to change the playback direction of both axes separately

What's missing is:

- a good musical representation of the sound timbres clustered by the Teensy library
- mapping of the clustered classes onto musical scales
- flexible grid size
- randomness effects
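The quantizer in the PureData patch is not reproduced here, but the general idea of a scale quantizer can be sketched in C++ as follows. The function `quantize` is a hypothetical example: it snaps a semitone value to the nearest note whose pitch class belongs to a given scale, and it assumes the scale is given as ascending pitch classes 0..11 relative to the root.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

// Snaps `semitone` (>= 0) to the nearest note whose pitch class is in `scale`.
// `scale` holds pitch classes 0..11 relative to the root, sorted ascending.
// With an ascending scale, ties resolve to the lower note.
int quantize(int semitone, const std::vector<int>& scale) {
    assert(!scale.empty() && semitone >= 0);
    int best = semitone;
    int bestDist = 128;  // larger than any distance that can occur below
    const int octave = semitone / 12;
    // Also check the neighbouring octaves, so that e.g. 11 can snap up to 12.
    for (int o = octave - 1; o <= octave + 1; ++o) {
        for (int pitchClass : scale) {
            const int candidate = o * 12 + pitchClass;
            const int dist = std::abs(candidate - semitone);
            if (candidate >= 0 && dist < bestDist) {
                bestDist = dist;
                best = candidate;
            }
        }
    }
    return best;
}
```

Placed between the step values and the CV output, such a function would restrict the hardware sequencer to a chosen scale instead of all 12 semitones per octave.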

REFLECTION

In the end I have to say that it's quite hard to define a fixed mapping for the representation of the still very abstract data the ML algorithm delivers at this point. The direct frequency-band mapping of the gestures captured by the piezo mic onto the whole sequence also has a rather random character. Likewise, the direct mapping of certain frequency points onto certain steps gives a very random-sounding result. The intention, intensity or timbre cannot really be heard in the corresponding melody changes.

CONCLUSION

I consider my idea of mapping the raw FFT data from the Teensy audio input onto note values a rather bad one, but I think the mapping of the classification results onto musical scales has some potential. Probably not in a fixed manner, but maybe if users were able to easily customize the connections between the different gestures and materials on the interface and their own musical interpretations. This would need a very sophisticated UI system and a very open algorithm structure to make it practical for users.
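As a rough illustration of such a customizable mapping, the following sketch stores one scale per classified gesture class and lets the user reassign it at any time. Nothing like this exists in the project yet; `Scale`, `GestureScaleMap`, `assign` and `lookup` are hypothetical names, and the class index is assumed to come from the classifier.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// A named scale, given as pitch classes 0..11 relative to the root.
struct Scale {
    std::string name;
    std::vector<int> pitchClasses;
};

// User-editable table: classified gesture class -> musical scale.
class GestureScaleMap {
public:
    explicit GestureScaleMap(Scale fallback) : fallback_(std::move(fallback)) {}

    // Lets the user connect a gesture class with a scale of their choice.
    void assign(std::size_t classIndex, Scale scale) {
        if (classIndex >= table_.size()) {
            table_.resize(classIndex + 1, fallback_);
        }
        table_[classIndex] = std::move(scale);
    }

    // Returns the scale for a class, or the fallback for unassigned classes.
    const Scale& lookup(std::size_t classIndex) const {
        return classIndex < table_.size() ? table_[classIndex] : fallback_;
    }

private:
    Scale fallback_;
    std::vector<Scale> table_;
};
```

The table itself is simple; the harder part remains the UI that would let a performer edit these assignments quickly on the device.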