Latest revision as of 21:04, 31 May 2016

Play with Rhythms:

Group work by:

- Kan Feng: Processing visualization, Arduino, TouchOsc

- Di Yang: MaxMsp visualization

- Shuyan Chen: MaxMsp sound

- JiXiang Jiang: Documentation

Introduction:

In our group, we wanted graphics and physical devices to act as outputs that give synchronized visual and audio feedback to the same controls on a mobile device. The result is a performance that combines the visualization with sound: rhythms generated by the sound cards in the laptops, by the physical components on the Arduino side, and by recordings of environmental sound.

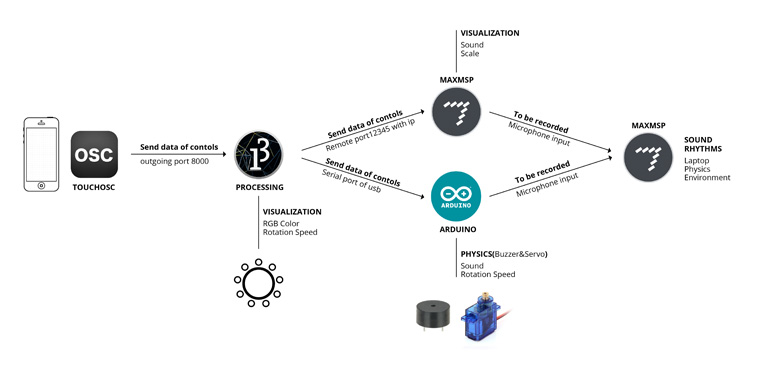

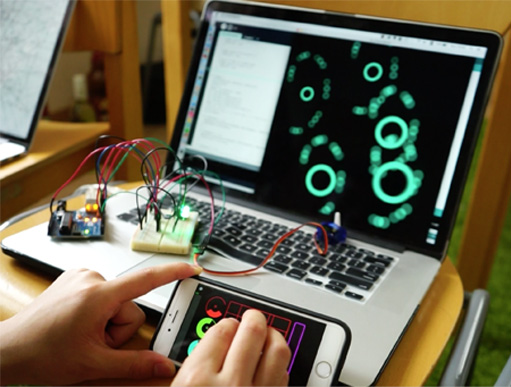

We used TouchOsc as the input, sending control data to Processing as OSC messages. Once Processing has received and parsed the data, it forwards it to MaxMsp on the other group members' computers to control the visualization and sound there, and to the Arduino to control the physical components, which generate different sounds. Hence the same controls on a mobile phone set the same parameters and drive the visual and audio interaction in both graphical and physical form.

Diagram:

Implementation:

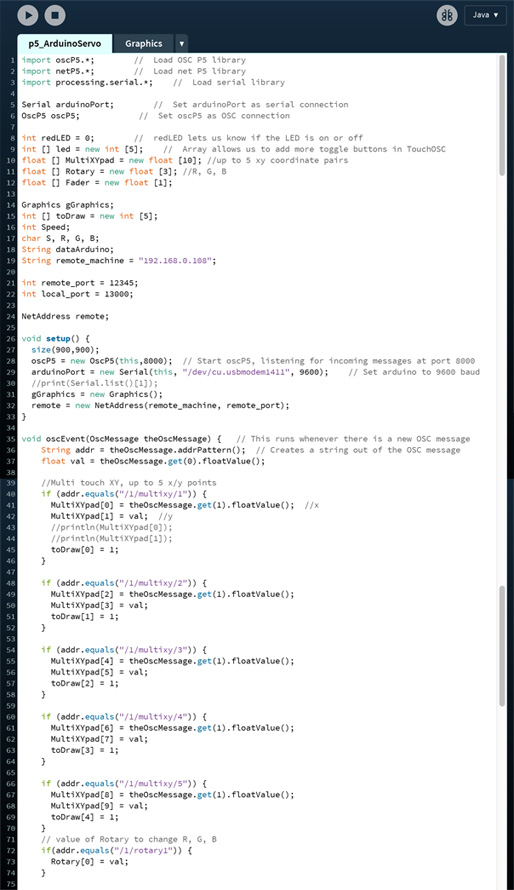

1. Input

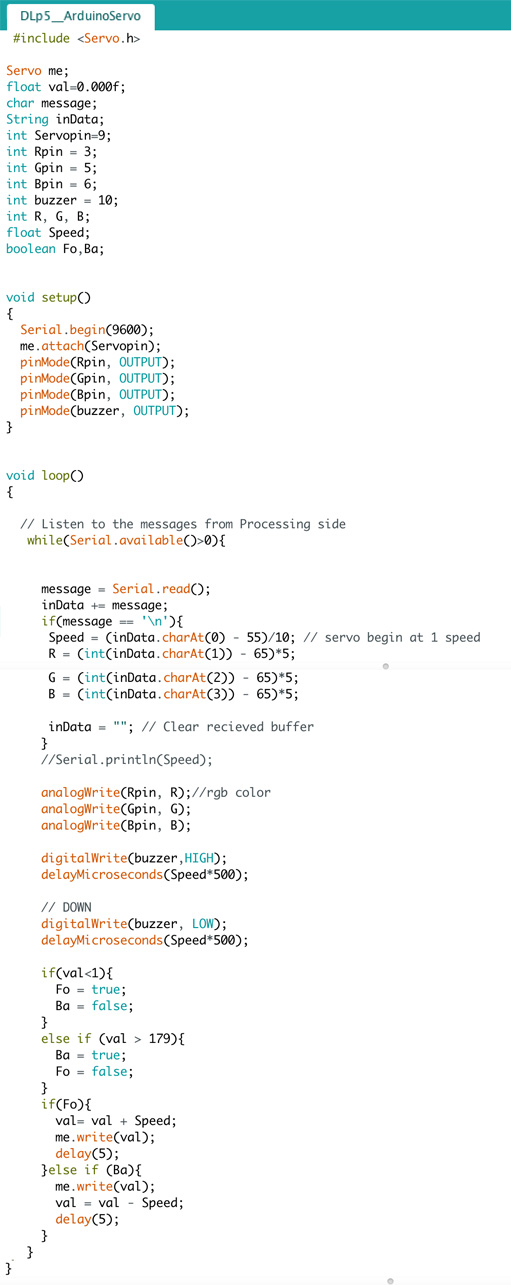

As the main input interface, the TouchOsc layout was designed to control three key elements: RGB color, position and speed. The layout, color, value range, ID, etc. of all these controls can be modified in the TouchOsc Editor on a computer.

RGB color (Rotary): value range from 0 to 255; controlled by rotation; sets the color of the visualization in Processing and of the RGB LED on the Arduino.

Position (Multi-xy pad): x and y values range from 0 to 900, matching the window size in Processing; multi-touch control; sets the position and number of visual elements in Processing and the sound effect in the MaxMsp visualization.

Speed (Fader): value range from 0 to 0.15, matching the algorithm in Processing; controlled by sliding; sets the rotation speed of the Processing visualization and the Arduino servo, and the sound of the Arduino buzzer.
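The three value ranges above can be written down as a small lookup table. The following Python sketch is only an illustration and is not part of our Processing code; the names `RANGES` and `clamp` are hypothetical. It shows how an incoming control value could be kept inside the range configured for it.

```python
# Value ranges as listed for the three TouchOsc controls.
# The dictionary keys and the function name are hypothetical.
RANGES = {
    "color": (0.0, 255.0),     # RGB Rotary
    "position": (0.0, 900.0),  # Multi-xy pad, x and y
    "speed": (0.0, 0.15),      # Speed Fader
}


def clamp(control: str, value: float) -> float:
    """Keep a control value inside the range configured for that control."""
    low, high = RANGES[control]
    return max(low, min(high, value))
```

For example, `clamp("speed", 0.5)` would fall back to the upper limit of the fader range.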

2. Message Communication

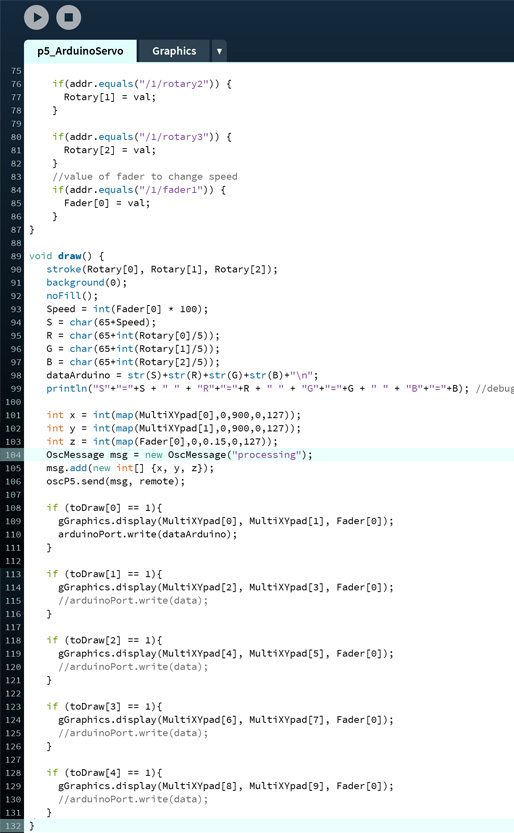

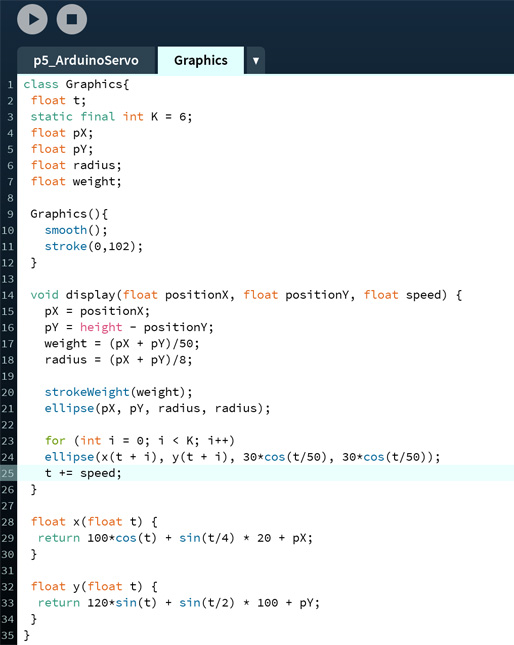

Processing: Acting as the gateway that receives the data from the controls, Processing on Kan's laptop was used to create a test visualization that reacts to the color, position, amount and rotation speed information sent from the TouchOsc controls. More importantly, it simultaneously forwards these messages to the Arduino as OSC messages over the serial port on Kan's side, and to MaxMsp on Di's laptop as OSC messages over UDP.
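To make the forwarding step more concrete, here is a minimal Python sketch of how a single OSC message with float arguments is laid out and sent over UDP. It is not our Processing sketch: the address `/speed`, the host `127.0.0.1` and the function names are assumptions for illustration only. The byte layout follows the OSC 1.0 rules (null-terminated strings padded to a multiple of four bytes, big-endian 32-bit floats).

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (4 - len(data) % 4) % 4
    return data + b"\x00" * padding


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", value) for value in values)
    return osc_string(address) + osc_string(type_tags) + payload


def send_osc(packet: bytes, host: str = "127.0.0.1", port: int = 12345) -> None:
    """Send one packet over UDP. Host and port are placeholders for the receiving laptop."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

A call such as `send_osc(osc_message("/speed", 0.1))` would then send one fader value to whatever is listening on that port.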

Arduino:

A servo, a buzzer and an RGB LED were used as physical components that read the TouchOsc control data sent from Processing. Driven by the values of the RGB Rotary and the Speed Fader, the color of the RGB LED and the sound of the servo (generated by its rotation speed) and the buzzer react synchronously.
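The arithmetic behind turning the Speed Fader value into something a buzzer can play can be sketched as a linear mapping. The Python below only illustrates that arithmetic and is not the Arduino firmware we used; the frequency range of 200 to 2000 Hz and the function names are assumptions.

```python
def linear_map(value, in_min, in_max, out_min, out_max):
    """Map value from [in_min, in_max] to [out_min, out_max], clamping at the ends."""
    value = max(in_min, min(in_max, value))
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def buzzer_frequency(speed: float) -> int:
    """Convert the fader value (0 to 0.15) to a tone frequency in Hz.

    The 200 to 2000 Hz range is a hypothetical choice for illustration.
    """
    return round(linear_map(speed, 0.0, 0.15, 200, 2000))
```

The same kind of mapping could be applied to the servo speed, with a different output range.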

MaxMsp:

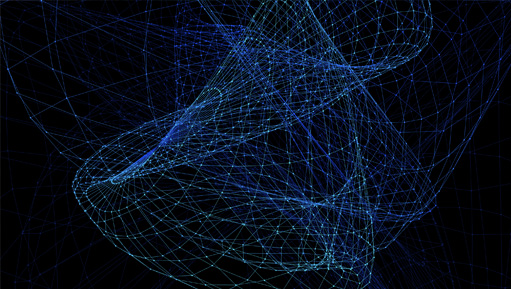

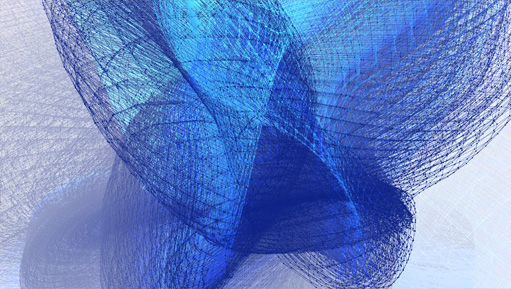

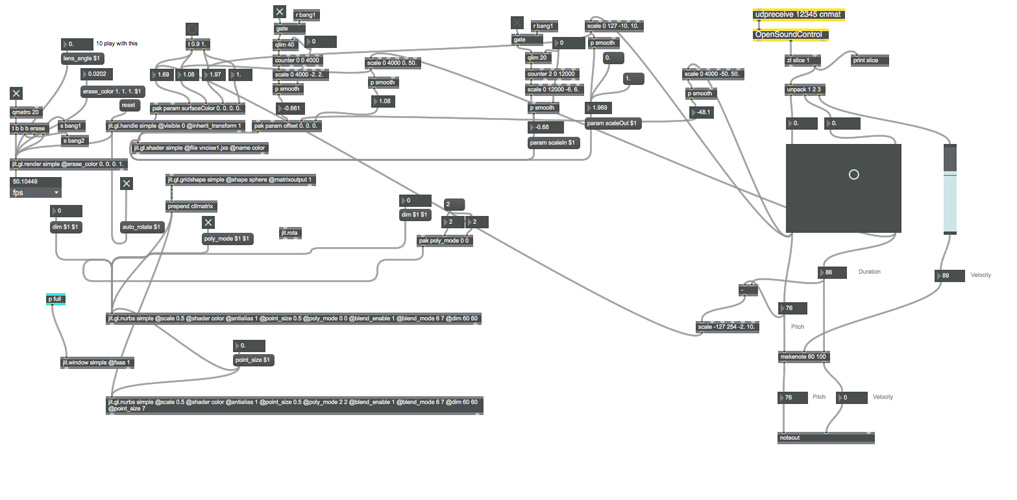

To create an attractive visualization, shader and noise rendering were used in the MaxMsp patch, which was planned as our main visualization, as in a VJ performance. What makes it more interesting is that it can be controlled smoothly, yet with surprising results, by the RGB Rotary and position controls in TouchOsc. The udpreceive object is the key point: it listens for the messages sent from Processing. The same port, 12345, needs to be set in both Processing and MaxMsp; with the OSC protocol created by CNMAT, the communication between Processing and MaxMsp works.
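What the receiving side has to do with each UDP packet can be sketched in Python as follows. This is not the MaxMsp patch itself, only an illustration of decoding an OSC message that carries float or integer arguments. The function names are hypothetical; port 12345 is the one mentioned above.

```python
import socket
import struct


def read_osc_string(data: bytes, offset: int):
    """Read a null-terminated, 4-byte padded OSC string starting at offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    next_offset = (end + 4) & ~3  # skip the terminator and padding
    return text, next_offset


def decode_osc_message(data: bytes):
    """Return (address, values) for a message with 'f' or 'i' arguments."""
    address, offset = read_osc_string(data, 0)
    type_tags, offset = read_osc_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    values = []
    for tag in type_tags[1:]:
        if tag == "f":
            values.append(struct.unpack_from(">f", data, offset)[0])
        elif tag == "i":
            values.append(struct.unpack_from(">i", data, offset)[0])
        else:
            raise ValueError(f"unsupported OSC type tag: {tag}")
        offset += 4
    return address, values


def listen_once(port: int = 12345):
    """Wait for one UDP packet on the given port and decode it."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        data, _sender = sock.recvfrom(1024)
    return decode_osc_message(data)
```

Both sides have to agree on the port number, which is why 12345 appears in Processing and in MaxMsp.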

3. Output

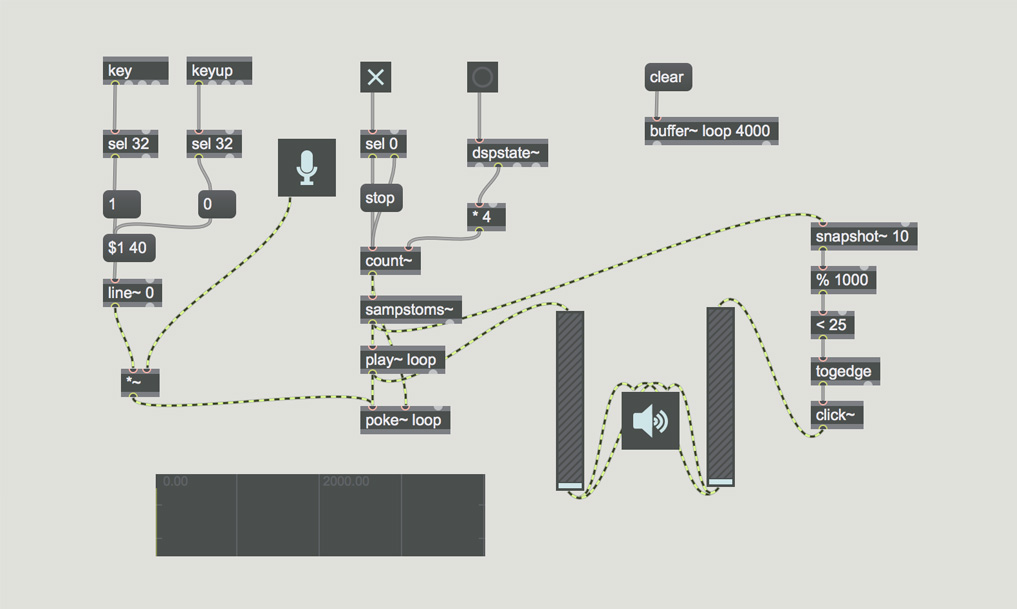

Our group's rhythm builds on the visualization and audio described above. Another MaxMsp patch was programmed to collect the sound from Di's laptop, from Kan's physical components and from the surrounding environment, and to play it in a loop. You can simply press the space bar on Shuyan's laptop to create new rhythms and add them to the melody. Inside this patch, the ASCII code 32 (which represents the space bar) defines the key to press to record new sound.
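The key check can be illustrated with a short Python sketch. It is not the MaxMsp patch: the class `LoopRecorder` is hypothetical, and we assume here that the space bar toggles recording on and off, with every finished recording added as a new layer of the loop.

```python
SPACE_KEY = 32  # ASCII code of the space bar


class LoopRecorder:
    """Toggle recording with the space bar and keep each take as a loop layer."""

    def __init__(self):
        self.recording = False
        self.layers = []    # finished takes that play in the loop
        self._current = []  # samples of the take being recorded

    def on_key(self, key_code: int) -> None:
        """React to a key press; only the space bar has an effect."""
        if key_code != SPACE_KEY:
            return
        if self.recording:
            # Second press: close the take and add it to the loop.
            self.layers.append(self._current)
            self._current = []
        self.recording = not self.recording

    def on_sample(self, sample) -> None:
        """Store incoming sound only while recording is active."""
        if self.recording:
            self._current.append(sample)
```

Any other key code is ignored, so only the space bar starts or stops a recording.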

With the visualization and audio on both laptops working together with the physical components, a single mobile phone, iPad or any other mobile device is enough to create and play with your own rhythms and melody. You get a user experience that spans the interface, the visualization and the audio generated by the physical output. Enjoy it!

Video Link https://www.youtube.com/watch?v=enNGtWU-nss&feature=youtu.be