
Revision as of 21:31, 16 June 2021

FIRST PROJECT THOUGHTS

Please take a look at Johannes Schneemann Project idea 1

This is a short summary of my thoughts, but I would like to develop my final project idea further.

What does the space we share online look like?

Participants in an online meeting share a virtual space without sharing the same physical circumstances. What circumstances influence a meeting in reality? Someone might be cold or hot, their surroundings might be very noisy, a washing machine might be running in the background, or it might be late at night or early in the morning because of a time difference...

The shared virtual space should be represented by an additional member of the BBB (BigBlueButton) conference that displays an interactive moving image. This image is generated from data collected from all participants during the meeting. For five participants, the parameters are body temperature, room temperature, pulse, time and noise. This non-visual data is translated into images, giving the room we share online another dimension.

I have to admit that this won’t be technically feasible in such a short time (real-time data exchange, embedding in BBB, interactive visualisation).

FINAL PROJECT THOUGHTS: pulse — distorting live images

A normal pulse is regular in rhythm and strength. Its rate is sometimes higher and sometimes lower, depending on the situation and one's health. You rarely see it and only feel it from time to time. Apart from an EKG, what could it look like? How can this vital rhythm be visualised?

I would like to use an Arduino pulse sensor to create an interactive installation. The setup consists of the pulse sensor, a webcam and a screen. Users connect to Max simply by clipping the sensor to their finger. The screen shows a pulsating, distorted webcam image of the participant: the pulse visually stretches the image, which moves in the rhythm of the pulse and creates a new result.

A more complex version would combine two pulses. Two Arduino pulse sensors are connected to Max, and a single distorted webcam image shows the combined pulses of two people. This idea could be developed further during the semester.

How it works: an Arduino pulse sensor measures the pulse at the fingertip. Usually, this is used to measure the radial pulse. In Max, a patch has to convert the data from the Arduino into parameters that distort the image.
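One way to think about the conversion step is as a scaling of each raw reading into a distortion parameter, similar to what Max's [scale] object does. The sketch below assumes a 10-bit Arduino board, where analogRead values run from 0 to 1023, and a hypothetical stretch range of 1.0 to 1.5. `scale`, `Smoother` and `stretch_from_reading` are illustrative names and are not part of Max or Arduino. The readings are smoothed first so the image does not jitter with sensor noise.

```python
def scale(value, in_low, in_high, out_low, out_high):
    """Linearly map `value` from [in_low, in_high] to [out_low, out_high].

    The result is clamped to the output range so that an occasional
    out-of-range reading cannot over-distort the image.
    """
    if in_high == in_low:
        raise ValueError("input range must not be empty")
    t = (value - in_low) / (in_high - in_low)
    t = min(max(t, 0.0), 1.0)  # clamp to [0, 1]
    return out_low + t * (out_high - out_low)


class Smoother:
    """Exponential moving average that keeps the distortion from jittering."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha  # 0 < alpha <= 1; smaller is smoother but slower
        self.value = None

    def update(self, x):
        if self.value is None:
            self.value = float(x)  # the first reading initialises the average
        else:
            self.value += self.alpha * (x - self.value)
        return self.value


def stretch_from_reading(raw, smoother, in_low=0, in_high=1023,
                         min_stretch=1.0, max_stretch=1.5):
    """Turn one raw sensor reading into a horizontal stretch factor.

    Assumption: 10-bit ADC (0-1023); the stretch range is a hypothetical choice.
    """
    return scale(smoother.update(raw), in_low, in_high, min_stretch, max_stretch)
```

Clamping is a deliberate choice here. A loose finger clip can produce spikes, and an unclamped mapping would turn those spikes into extreme distortions.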

Moodboard

Since I want to set up a user-friendly installation and I failed to build a pulse sensor with the equipment I have at home, I ordered one online.

03.06.2021 sensor arrived / trial and error

I connected the pulse sensor to Max and ran into some trouble along the way.

As the sensor is sensitive to light, I came up with the idea of putting it inside a box. It seems to make a difference, but I am not sure.

I used objects similar to those in the "sensing physical parameters" patch, along with Arduino code I found online.

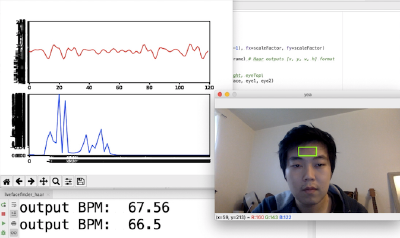
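The Arduino code from online is not shown here. Whether it runs on the board or in the patch, the core of pulse detection is usually a threshold crossing with some hysteresis, followed by a BPM estimate from the spacing between beats. This is a minimal sketch of that logic under those assumptions. The threshold, the hysteresis and the 50 Hz sample rate in the example are hypothetical values that would need tuning to the real sensor.

```python
def detect_beats(samples, threshold, hysteresis=20):
    """Return the indices of samples where a new heartbeat starts.

    A beat is counted when the signal rises above `threshold`. The detector
    only re-arms after the signal has dropped below `threshold - hysteresis`,
    so noise hovering around the threshold is not counted twice.
    """
    beats = []
    armed = True
    for i, value in enumerate(samples):
        if armed and value > threshold:
            beats.append(i)
            armed = False
        elif not armed and value < threshold - hysteresis:
            armed = True
    return beats


def bpm_from_beats(beat_indices, sample_rate_hz):
    """Average beats per minute from the spacing of detected beats."""
    if len(beat_indices) < 2:
        return None  # need at least one interval
    intervals = [b - a for a, b in zip(beat_indices, beat_indices[1:])]
    mean_interval_s = sum(intervals) / len(intervals) / sample_rate_hz
    return 60.0 / mean_interval_s


# Synthetic signal (hypothetical): 50 samples per second, one short peak per second.
signal = [600 if i % 50 < 5 else 500 for i in range(200)]
beats = detect_beats(signal, threshold=550)
print(beats, bpm_from_beats(beats, sample_rate_hz=50))  # prints [0, 50, 100, 150] 60.0
```

Each detected beat index could also serve directly as the moment to trigger a distortion or a snapshot.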

I have no idea how to connect the sensor input to the webcam image. I am searching online, but I haven’t found good references or tutorials on how to distort a live image with a specific data input. I would be very happy about any hint. During my research, I found a method that uses the webcam itself to measure your heart rate. I think this would be far more interesting! [1] The webcam would then serve both as the sensor and for creating the visuals.
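Webcam-based heart rate measurement is usually called remote photoplethysmography. Blood volume changes slightly alter skin colour, most visibly in the green channel, so the mean green value of a skin region oscillates at the heart rate. This sketch assumes that the per-frame mean green value of the face region has already been extracted, and it assumes a plausible pulse band of 0.75 to 3 Hz (45 to 180 BPM). It uses a plain DFT over candidate frequencies instead of an FFT library, so it is not necessarily what the method in [1] does.

```python
import math


def dominant_frequency(signal, fps, f_min=0.75, f_max=3.0, step=0.01):
    """Return the frequency in Hz with the most energy between f_min and f_max.

    A plain DFT evaluated only at the candidate frequencies: slow, but
    dependency-free and fine for a few hundred frames.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # drop the constant brightness level
    best_f, best_power = f_min, -1.0
    for k in range(int(round((f_max - f_min) / step)) + 1):
        f = f_min + k * step
        w = 2 * math.pi * f / fps  # radians per frame at this frequency
        re = sum(x * math.cos(w * i) for i, x in enumerate(centered))
        im = sum(x * math.sin(w * i) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return best_f


def estimate_bpm(green_means, fps):
    """Heart rate estimate from the per-frame mean green value of the skin region."""
    return dominant_frequency(green_means, fps) * 60.0
```

In practice, the estimate needs several seconds of frames and a fairly still face, because movement and lighting changes also alter the green average.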

What I am imagining right now, without knowing where to start, is the following: the visitor approaches the screen and sees themselves in the webcam image. The webcam identifies the pulse and also recognizes the person (who ideally turns reddish at 50% opacity, maybe by using the cellblock object?). The pixels of the person stretch to the rhythm of the pulse.

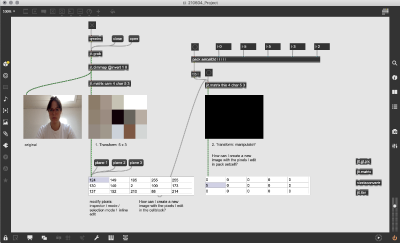
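The "reddish, opacity 50%" idea amounts to alpha-blending a colour over the pixels that belong to the person. This is a minimal sketch that assumes a frame stored as rows of (r, g, b) tuples and a same-shaped boolean mask marking the person. How the mask is obtained, for example through background subtraction, is left open, and the function names are hypothetical.

```python
def tint(pixel, color=(255, 0, 0), opacity=0.5):
    """Alpha-blend `color` over an (r, g, b) pixel; opacity 0.5 means 50%."""
    return tuple(round((1 - opacity) * p + opacity * c) for p, c in zip(pixel, color))


def tint_person(frame, mask, color=(255, 0, 0), opacity=0.5):
    """Return a new frame in which every pixel flagged in `mask` is tinted.

    `frame` is a list of rows of (r, g, b) tuples and `mask` is a list of
    rows of booleans with the same shape (True = part of the person).
    """
    return [
        [tint(px, color, opacity) if inside else px for px, inside in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]
```

The function builds a new frame instead of editing the old one in place, so the untouched webcam frame stays available for the next processing step.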

04.06.2021 manipulate webcam image by pixels

Please find the patch here: File:210604_Project.maxpat

I can't figure out how to create a new image or video from the edited cellblock (the edited pixels). Do you have a tutorial or references for that? I am wondering whether a command that manipulates the whole image, rather than individual pixels, would be more interesting. That is probably why you recommended jit.gl.pix, but I can't get it to work.
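One common way to produce a new image from a whole-image manipulation is an inverse lookup: for every output pixel, compute which source pixel it should show. This is roughly the kind of per-pixel program that jit.gl.pix runs. The Python below only illustrates that logic and does not reproduce the Max patch. It assumes a frame stored as a list of rows, and `stretch_horizontal` is a hypothetical name.

```python
def stretch_horizontal(frame, factor):
    """Return a new frame stretched horizontally about its centre.

    Each output pixel looks up the source pixel it maps back to (nearest
    neighbour), so the output has the same size as the input; factor > 1
    zooms in horizontally, factor == 1 returns an identical copy.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    out = []
    for row in frame:
        width = len(row)
        cx = (width - 1) / 2  # horizontal centre of the row
        new_row = []
        for x in range(width):
            src = round(cx + (x - cx) / factor)  # inverse mapping to the source
            src = min(max(src, 0), width - 1)    # stay inside the image
            new_row.append(row[src])
        out.append(new_row)
    return out
```

If `factor` is driven by the pulse, for example 1.0 between beats and briefly higher on each beat, the whole image stretches in rhythm without any pixel being edited by hand.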

10.06.2021 exploring effects

Update on the pixel manipulation

I was not so happy with the pixel manipulation. Online, I found an effect that I really like; it is used for motion tracking. If the object is still, you don't see it. If it moves, you can barely see its outline. The faster it moves, the more of it you can see.
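The effect described matches frame differencing. Each pixel shows how much it changed since the previous frame, so a still scene goes black and faster motion reveals more of the moving shape. In Jitter, this is commonly built with jit.op using its absdiff operator against a delayed copy of the frame. The sketch below only shows the arithmetic on small grayscale frames stored as lists of rows, and the function names are hypothetical.

```python
def frame_difference(prev, curr):
    """Per-pixel absolute difference of two grayscale frames (lists of rows)."""
    return [[abs(c - p) for p, c in zip(prow, crow)] for prow, crow in zip(prev, curr)]


def motion_amount(prev, curr):
    """Mean absolute difference: 0 for a still scene, larger for faster motion."""
    diff = frame_difference(prev, curr)
    total = sum(sum(row) for row in diff)
    count = sum(len(row) for row in diff)
    return total / count if count else 0.0


# A bright two-pixel object that moves one pixel (slow) or three pixels (fast).
prev = [[255, 255, 0, 0, 0, 0]]
slow = [[0, 255, 255, 0, 0, 0]]
fast = [[0, 0, 0, 255, 255, 0]]
print(motion_amount(prev, prev), motion_amount(prev, slow), motion_amount(prev, fast))
# prints 0.0 85.0 170.0
```

The slow move leaves an overlap between the old and new positions that cancels out, so only the edges, the outline, remain. The fast move has no overlap, so the whole shape appears.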

I simply changed the color of the image with jit.unpack and jit.pack. To capture a snapshot, I used the microphone: snapping your fingers or clapping your hands triggers it. Every snapshot should be saved automatically, but somehow this didn’t work; for now, you have to save it manually.
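A snap or clap is a short, loud spike. A sound-triggered snapshot therefore needs a level threshold and a short refractory period, so that one clap produces one snapshot instead of a burst. The patch itself is not shown. This sketches only the triggering logic and assumes a stream of per-block peak levels between 0.0 and 1.0, which in Max could come from something like peakamp~. The threshold and refractory length are hypothetical values to tune in the actual room.

```python
def snapshot_triggers(levels, threshold=0.5, refractory=10):
    """Return the block indices at which a snapshot should be taken.

    `levels` holds one peak amplitude (0.0-1.0) per audio block. After a
    trigger, further spikes are ignored for `refractory` blocks, so the
    tail of the same clap does not fire again.
    """
    triggers = []
    last = None
    for i, level in enumerate(levels):
        if level >= threshold and (last is None or i - last >= refractory):
            triggers.append(i)
            last = i
    return triggers


levels = [0.1, 0.9, 0.8] + [0.1] * 9 + [0.7]  # one clap, its tail, then a second clap
print(snapshot_triggers(levels))  # prints [1, 12]
```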

Here is the patch, File:210609_Moving Fast.maxpat, and some screenshots:

16.06.2021

If the embedded video doesn't work, here is the link: https://youtu.be/OIjoACkDEIc

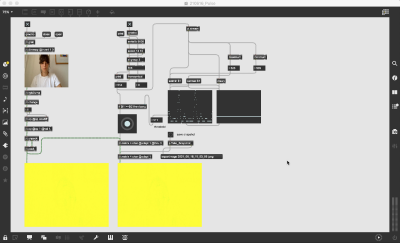

Before connecting the pulse sensor to Max, I first built a simplified version driven by "mousestate" (mouse input). After that, I only had to connect the Arduino again.

Please find the patches here:

- Mousestate File:210613_Mousestate.maxpat

- Pulse Sensor File:210616_Pulse.maxpat

Everything worked, and I generated snapshots triggered by the pulse sensor. Unfortunately, I was not happy with the result. I still had the previous patch in mind, where a sound triggers the snapshot, which let me move away from the computer. With the pulse sensor setup, I was glued to the screen, unable to move around.