Semester Project: Chicken Tracker

We humans communicate: at work, with friends, with colleagues and with relatives. To classify the messages communicated to us, we use our senses. In direct verbal communication, our auditory perception is primarily engaged. At first glance one might think the acoustic channel is clear and unambiguous. That may be true in individual cases, but in everyday life communication turns out to be a diverse, complex act. Human communication and perception are multi-layered. Besides the acoustic channel, we use optical, olfactory and tactile stimuli to communicate with our fellow human beings. What happens to our understanding of our counterparts when visual communication is restricted, touch and smell are eliminated completely, and eye contact, facial expressions and gestures are distorted by the Internet?

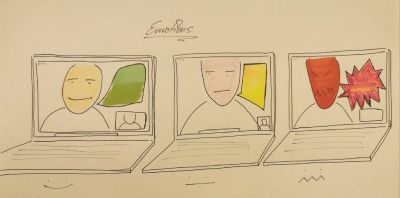

The current pandemic and the associated lockdown limit verbal, interpersonal exchange to two elementary channels. Acoustic communication and the visual communication made possible by video telephony are coming to the fore in our everyday lives. However, contact via the medium of the Internet offers little opportunity to express empathy and feelings. For this purpose I have invented a "Chicken Tracker". As a support tool for the usual video chat, the "Chicken Tracker" is supposed to visually amplify a feeling. The basic idea is simple. Participant A starts talking and initially appears completely realistic in the video chat. If A talks himself into a rage, the volume of his acoustic output increases steadily. Through the emotion tracker, the amplified signal in turn makes his skin color increasingly red, until A appears completely red. Once A is in a rage, the volume of his output increases until it collapses, and A turns into a chicken for as long as the volume is higher than a threshold X. The whole idea is about loosening up in online discussions and not taking yourself too seriously.
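The volume-to-picture mapping described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Max patch itself: the function names, the `quiet` level and the value `0.6` used for the threshold X are all hypothetical, and audio samples are assumed to lie in the range -1 to 1.

```python
import math


def rms(samples):
    """Root-mean-square level of one block of audio samples (assumed in [-1, 1])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def red_amount(level, quiet=0.1, threshold_x=0.6):
    """Map a loudness level to a red blend factor between 0 and 1.

    `quiet` and `threshold_x` are assumed values chosen for illustration.
    Below `quiet` the picture stays realistic; at `threshold_x` it is fully red.
    """
    if level <= quiet:
        return 0.0
    if level >= threshold_x:
        return 1.0
    return (level - quiet) / (threshold_x - quiet)


def tint_red(pixel, amount):
    """Blend one (r, g, b) pixel towards pure red by `amount` (0 = unchanged, 1 = red)."""
    r, g, b = pixel
    return (
        round(r + (255 - r) * amount),
        round(g * (1 - amount)),
        round(b * (1 - amount)),
    )


def choose_picture(level, threshold_x=0.6):
    """Show the chicken only while the level is higher than the threshold X."""
    return "chicken" if level > threshold_x else "camera"
```

For each block of audio one would compute `rms(...)`, tint the skin pixels with `tint_red(pixel, red_amount(level))`, and swap in the chicken while `choose_picture(level)` returns `"chicken"`.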

The project has several stages of development. First, I wanted to change certain colours in Max video, using grab. To do so, I built a patch that exchanges bright and dark colours by means of a matrix.
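One possible reading of "exchanging bright and dark colours by a matrix" is a colour inversion written as a matrix multiplication plus an offset. The Python sketch below shows that reading only. It is an assumption about the operation, not a description of the actual patch, and the names `apply_color_matrix`, `INVERT` and `INVERT_OFFSET` are hypothetical.

```python
def apply_color_matrix(pixel, matrix, offset):
    """Apply a 3x3 colour matrix plus an offset to one (r, g, b) pixel.

    Each output channel is a weighted sum of the input channels,
    shifted by the offset and clamped to the 0..255 range.
    """
    return tuple(
        max(0, min(255, round(sum(m * c for m, c in zip(row, pixel)) + off)))
        for row, off in zip(matrix, offset)
    )


# Inversion: every channel is negated and shifted by 255,
# so bright values become dark and dark values become bright.
INVERT = (
    (-1, 0, 0),
    (0, -1, 0),
    (0, 0, -1),
)
INVERT_OFFSET = (255, 255, 255)
```

With these values, `apply_color_matrix((10, 200, 255), INVERT, INVERT_OFFSET)` gives `(245, 55, 0)`. Other matrices would swap or mix channels instead of inverting them.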

The next step will be tracking human skin color by RAL code:

Source: https://colorswall.com/palette/2513/
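A simple way to track pixels close to a reference skin color is to measure each pixel's distance to that color and keep those within a tolerance. The sketch below assumes plain RGB values and a Euclidean distance. The reference color and tolerance are parameters, and the function names are hypothetical. Actual RAL values would have to be converted to RGB and are not given here.

```python
import math


def color_distance(p, q):
    """Euclidean distance between two (r, g, b) colors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def skin_mask(frame, reference, tolerance):
    """Return one boolean per pixel: True where the pixel is close to `reference`.

    `frame` is a flat list of (r, g, b) tuples. `reference` and `tolerance`
    must be chosen by the user; no particular skin tone is assumed.
    """
    return [color_distance(pixel, reference) <= tolerance for pixel in frame]
```

Only the pixels marked `True` would then be recolored, so the background of the video chat stays unchanged.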

Changing color by sound: -

Changing skin color to red by volume: -

Changing the picture from the grab (video camera) to the chicken at maximum volume: -

Connecting the patch to Zoom

"Greenscreen"

1. Try 2 videos in 1 patch; the goal is for them to run mixed into each other.
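Mixing two videos into each other can be thought of as a per-pixel crossfade. The following Python sketch shows the arithmetic on two frames given as flat lists of (r, g, b) tuples. It illustrates the idea only and makes no claim about how the patch does it, and the function name `crossfade` is hypothetical.

```python
def crossfade(frame_a, frame_b, mix):
    """Blend two equally sized frames.

    mix = 0.0 shows only frame_a, mix = 1.0 shows only frame_b,
    values in between show a weighted mixture of both.
    """
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same number of pixels")
    mix = max(0.0, min(1.0, mix))  # keep the blend factor in a valid range
    return [
        tuple(round(a * (1 - mix) + b * mix) for a, b in zip(pixel_a, pixel_b))
        for pixel_a, pixel_b in zip(frame_a, frame_b)
    ]
```

Driving `mix` from the volume would let the camera picture fade gradually into the second video instead of switching abruptly.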

https://www.studioroosegaarde.net/info

https://www.studioroosegaarde.net/project/space-waste-lab