Source:

Ryan Aylward and Joseph A. Paradiso. 2007. A compact, high-speed, wearable sensor network for biomotion capture and interactive media. In Proceedings of the 6th international conference on Information processing in sensor networks (IPSN ’07). ACM, New York, NY, USA, 380-389. DOI=http://dx.doi.org/10.1145/1236360.1236408

Summary:

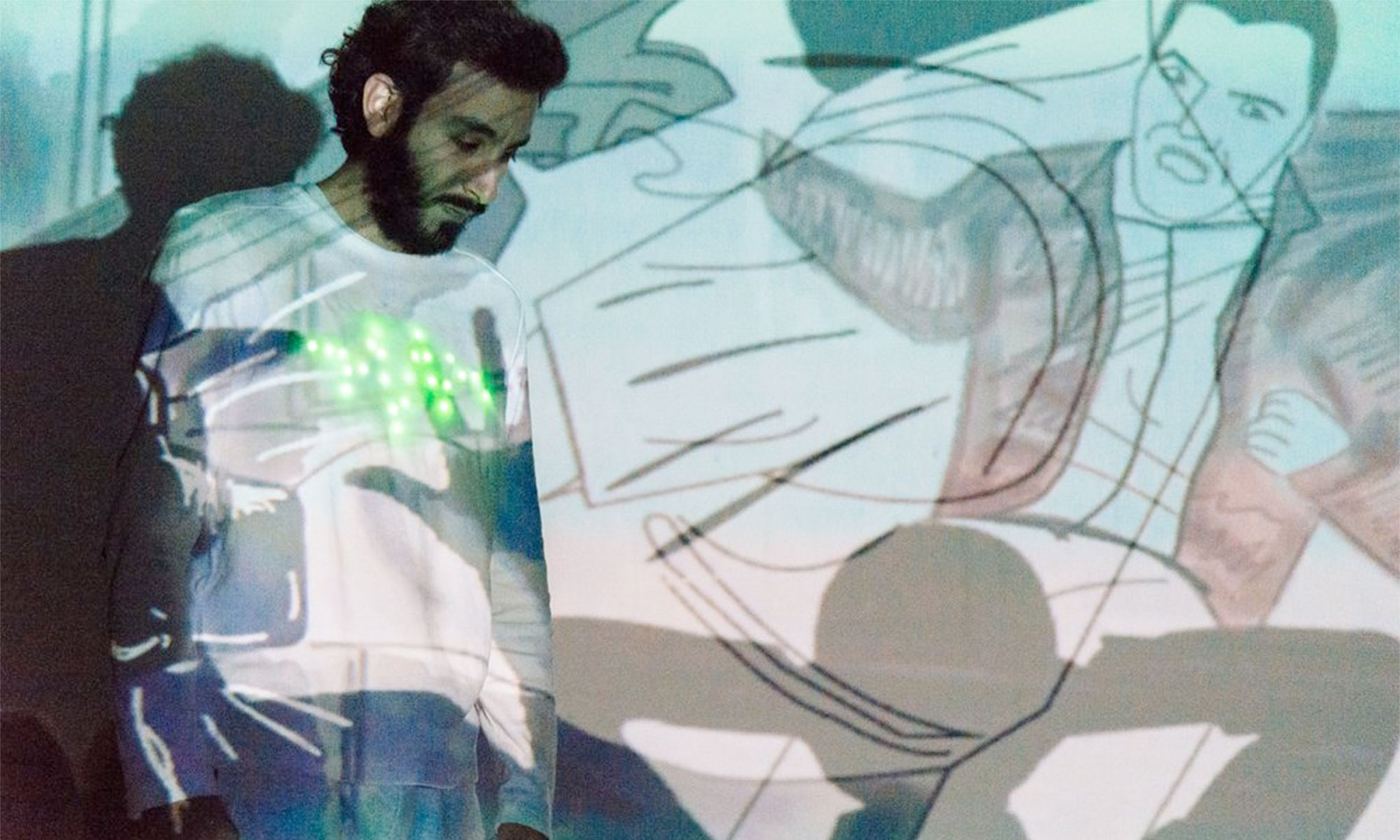

The article "A Compact, High-Speed, Wearable Sensor Network for Biomotion Capture and Interactive Media" describes a wearable sensor system for multiple dancers (and professional athletes). For this use, low latency and high resolution are both essential. It is equally important to keep power consumption low, so that the battery lasts, and to keep the device small enough to actually be wearable. One interesting question is whether the sensors can pick up information that video-based motion capture cannot. The article covers the sensor strategies, the different wireless platforms, and several hardware details. Notably, they use the nRF2401A, which Lucas is going to check out next week. In feature extraction, they mainly focus on dancer-to-dancer influence and group dynamics. One problem is that there are already so many ways to analyse and interpret a single dancer that finding a clean mapping for a group of dancers becomes even harder. To convert the collected data into sound or video, they simply record it and play it back several times into Max/MSP to find good mappings. To sum up, they found a technical way to collect low-latency, high-resolution data; only the interpretation and the generation of meaningful output could be improved.
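To make the idea of dancer-to-dancer influence more concrete, here is a minimal sketch of one possible feature: a lagged cross-covariance between the sensor signals of two dancers, used to estimate who follows whom and by how many samples. This is only an illustration under our own assumptions, not necessarily the method used in the article. The function names (`cross_covariance`, `best_lag`) and the toy sine-wave data are hypothetical.

```python
from math import sin, pi

def cross_covariance(a, b, lag):
    """Mean-removed covariance between a[t] and b[t + lag] over their overlap."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    if lag >= 0:
        pairs = list(zip(a[:len(a) - lag], b[lag:]))
    else:
        pairs = list(zip(a[-lag:], b[:len(b) + lag]))
    return sum((x - mean_a) * (y - mean_b) for x, y in pairs) / len(pairs)

def best_lag(a, b, max_lag):
    """Lag (in samples) at which b is most similar to a; positive means b follows a."""
    return max(range(-max_lag, max_lag + 1),
               key=lambda lag: cross_covariance(a, b, lag))

# Toy data (assumption): one periodic movement, and a second dancer who
# repeats the same movement five samples later.
leader = [sin(2 * pi * t / 40) for t in range(200)]
follower = [0.0] * 5 + leader[:-5]

print(best_lag(leader, follower, 10))
```

With real data, `leader` and `follower` would be replaced by one recorded sensor channel per dancer (for example an accelerometer axis), and the lag could be converted to milliseconds using the sample rate.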

Relevance for our project:

It looks like the nRF2401A could be quite useful for our project, and their technology could help whenever we run into problems at some stage between input and output. However, I don't think we should start with a dance ensemble; we should focus on a single actor instead. We should also consider using Max/MSP to manipulate audio.