
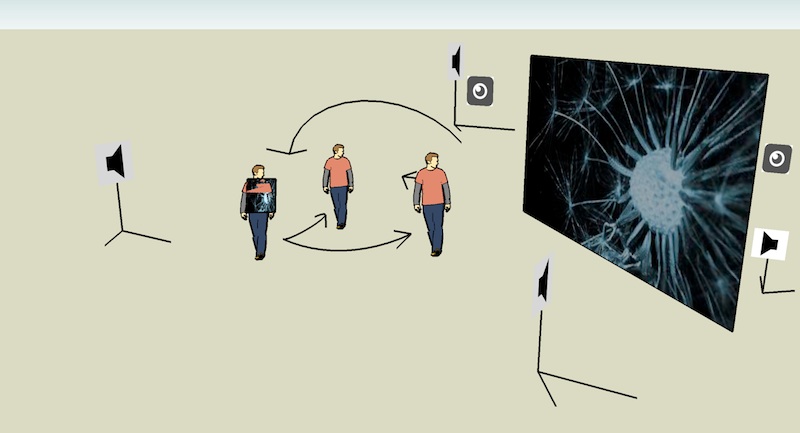

Sketch of “Synapse”

What would happen if human beings had no means of communicating with one another? What if people lost their physiological senses? If that happened, how could we communicate, convey, or perceive a message in the future? I assume that each of us is an individual but also a unit that can be connected; everything we do and every message we send is an attempt to connect with others. We could even become others: after transforming into them, we could read, understand, and reconstruct them. If we stand in the same area (or, if not, we will try to find the others), we will build a well-connected, unified world, expressed not only on the outside but throughout its inner structure.

- In this project, I plan to build an initial environment without light or sound. Each person plays the role of one unit (which I call a “synapse” here). Once a unit enters, it triggers visual and auditory reactions; as more units arrive, they can connect to or reconstruct the others by projecting their information onto other bodies. The more units enter the area, the more visuals, sound, and connections occur. The final result I expect is a fully reconstructed space filled with different (or identical) units in a complete neural network system.

Diagram

Visual Design

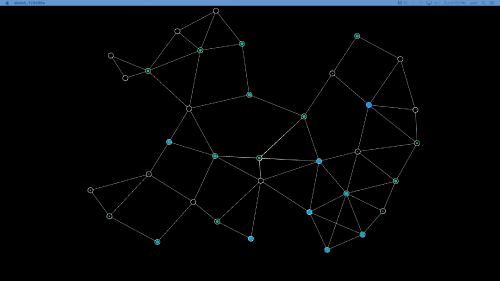

Neural System in Processing

This Processing sketch is based on Felix Faire's Neural Network.

Each neuron can be charged: the user holds the "c" key to fill a neuron, the basic element, with energy, which is then distributed to the other neurons to build up the whole network. The following shows how to operate the neural system:

Key Commands

- hold "c" to charge neurons

- press "f" to toggle fade

- press "x" to clear sketch
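The charge-and-distribute idea behind the "c" key can be illustrated with a small Python model. This is only a sketch under my own assumptions, not the code of the Processing sketch: the `Neuron` class, `hold_c`, `distribute`, the charge step of 0.25, and the firing threshold of 1.0 are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Neuron:
    charge: float = 0.0
    links: list = field(default_factory=list)  # neurons this one feeds


def hold_c(neuron, amount=0.25, limit=1.0):
    """One frame of holding "c": add energy, capped at `limit` (assumed values)."""
    neuron.charge = min(limit, neuron.charge + amount)


def distribute(neurons, threshold=1.0):
    """Every neuron at or above `threshold` passes its whole charge on,
    split equally among its links. Returns how many neurons fired."""
    fired = [n for n in neurons if n.charge >= threshold and n.links]
    transfers = []
    for n in fired:
        share = n.charge / len(n.links)
        transfers.extend((target, share) for target in n.links)
        n.charge = 0.0
    # Apply all transfers afterwards so every neuron fires "simultaneously".
    for target, share in transfers:
        target.charge += share
    return len(fired)
```

In this model, holding "c" for four frames fills one neuron, and the next call to `distribute` empties it into its linked neurons.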

Screen Shot

--

Sound Design

- Electronic Sound

-Max/MSP Patch-

For the electronic sound, the patch contains multiple FM (frequency modulation) and oscillator presets. I used three modules in total throughout this piece.

In addition, a Kinect camera is used in this project. When it captures the skeleton of the performer (myself, the violin player), the data is sent to the sound patch via OSC. As a result, I can control sound parameters such as frequency, volume, and sampling size with gestures. The Kinect tracking library can be downloaded here: Synapse for Kinect
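For readers unfamiliar with FM, the basic two-oscillator form can be sketched in Python. This is the textbook formula, sin(2π·fc·t + I·sin(2π·fm·t)), and not a transcription of the Max/MSP presets; the function name and the default duration and sample rate are assumptions.

```python
import math


def fm_samples(carrier_hz, modulator_hz, index, seconds=0.01, sample_rate=44100):
    """Return samples of a sine carrier whose phase is modulated by a sine.

    `index` is the modulation index: 0 gives a plain sine wave, and larger
    values add more sidebands, which makes the tone brighter.
    """
    count = round(seconds * sample_rate)
    samples = []
    for i in range(count):
        t = i / sample_rate
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return samples
```

A call such as `fm_samples(440, 110, 2.0)` produces 10 ms of a 440 Hz carrier modulated at 110 Hz.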
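The gesture-to-sound control can be thought of as a range mapping from joint coordinates to sound parameters. The Python sketch below shows that idea only; the joint names, the coordinate range of -1 to 1, and the output ranges are hypothetical and are not taken from the patch or from the Kinect library.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Map `value` linearly from one range to another, clamping at the ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)


def gesture_to_sound(right_hand_y, left_hand_x):
    """Turn two (assumed) normalised joint coordinates into sound parameters."""
    return {
        "frequency_hz": scale(right_hand_y, -1.0, 1.0, 110.0, 880.0),
        "volume": scale(left_hand_x, -1.0, 1.0, 0.0, 1.0),
    }
```

With these assumed ranges, a hand at the centre of the frame gives `gesture_to_sound(0.0, 0.0)`, that is, 495 Hz at half volume.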

- Spatial Sound System

In the same patch, I designed a spatial sound system (currently for four speaker channels) based on trigonometric functions. The sine function is multiplied by the cosine function, and the result gives the radius of a circle, which is mapped to the circular panning panel. The user can either control the panning of the sound manually or press the toggle to trigger an automatic 360-degree surround panning effect.

The whole sound patch can be downloaded here:
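One common way to pan a source around four speakers with sine and cosine is sketched below in Python. It is a standard equal-power approach that I am substituting for illustration, so it may differ from the calculation inside the patch; the speaker names, the `radius` parameter, and the 4-second rotation period are assumptions.

```python
import math


def quad_gains(angle_deg, radius=1.0):
    """Equal-power gains for four speakers from a position on a circle.

    0 degrees is hard right and 90 degrees is front. `radius` runs from
    0 (centre, all speakers equal) to 1 (on the edge of the circle).
    """
    radius = max(0.0, min(1.0, radius))
    theta = math.radians(angle_deg)
    x = radius * math.cos(theta)  # -1 (left) .. +1 (right)
    y = radius * math.sin(theta)  # -1 (rear) .. +1 (front)
    lr = (x + 1) / 2 * (math.pi / 2)
    fb = (y + 1) / 2 * (math.pi / 2)
    left, right = math.cos(lr), math.sin(lr)
    rear, front = math.cos(fb), math.sin(fb)
    # Each gain is a product of a sine and a cosine term; the squares of
    # the four gains always sum to 1, so loudness stays constant.
    return {
        "front_left": front * left,
        "front_right": front * right,
        "rear_left": rear * left,
        "rear_right": rear * right,
    }


def auto_angle(elapsed_s, period_s=4.0):
    """Angle for the automatic 360-degree rotation after `elapsed_s` seconds."""
    return (elapsed_s / period_s * 360.0) % 360.0
```

Manual control corresponds to calling `quad_gains` with an angle chosen by the user, while the automatic mode feeds it `auto_angle(t)` on every update.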

Sound Patch

- Violin Composition

To simulate the neural network system, I designed a "Tone Distribution System" for the violin part. This system uses the tone "G" as the fundamental neuron because it sits in the middle of one octave (from C1 to C2). Starting from this tone as a motive, several compositional methods and special playing techniques develop it and simulate a neural system, such as semitones, microtones, mirroring, and slides. The total duration is about 2 minutes, and the composition has three or four sections. The complete presentation is shown below.
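One possible reading of the semitone, microtonal, and mirror operations around "G" can be written down in Python. This is my own illustration and not the score: pitches are counted in semitones above the lower C, the lower C is assumed to be middle C (about 261.63 Hz in equal temperament), and all function names are hypothetical.

```python
G_OFFSET = 7  # G lies 7 semitones above the lower C of the octave


def mirror(offset, axis=G_OFFSET):
    """Reflect a pitch around the axis tone (G by default)."""
    return 2 * axis - offset


def neighbours(axis=G_OFFSET, step=1.0):
    """Tones just below and above the axis: step=1 is a semitone,
    step=0.5 a quarter tone (microtonal)."""
    return (axis - step, axis + step)


def frequency(offset, c_hz=261.63):
    """Equal-tempered frequency of a pitch `offset` semitones above the lower C."""
    return c_hz * 2 ** (offset / 12)
```

For example, `mirror(9)` reflects A (9 semitones above C) to F (5 semitones above C), and `neighbours(step=0.5)` gives the two quarter tones on either side of G.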