PDF DOCUMENTATION + SOURCE FILES

https://www.dropbox.com/s/p78pr1y9687rda8/ozanakkoyun_of_docs.zip?dl=0

CONCEPT

The project’s idea is based on the topic of the “Körper Raum Stadt” (Body Space City) class. In it, I wanted to investigate how the human body interacts with digital and physical space, while also asking whether space can be defined by the human body (the participant), by light, or by both together. The goal was to translate physical gestures into virtual forces: the tracking data is processed to generate geometries, or planes, directly in front of the participant. The human body, represented by the “body” rectangle, plays a triggering role at the center. It is also treated as a light source that illuminates the surrounding planes as they are generated.
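The text does not describe how the body-as-light-source was computed. One common way to model the idea is a point light with distance attenuation: each plane gets darker the farther its center is from the body. The following is a minimal Python sketch under that assumption only. The function name and the `intensity` and `falloff` parameters are hypothetical and were not taken from the project.

```python
import math


def plane_brightness(body_center, plane_center, intensity=1.0, falloff=200.0):
    """Hypothetical point-light model: the body is the light source.

    Brightness is `intensity` at the body itself and drops smoothly with
    distance; at a distance equal to `falloff` it is half of `intensity`.
    Both parameters are illustrative assumptions, not project values.
    """
    d = math.dist(body_center, plane_center)  # Euclidean distance in pixels
    return intensity / (1.0 + (d / falloff) ** 2)


# Usage: planes generated nearer the participant are lit more strongly.
body = (512.0, 384.0)
for plane in [(512.0, 384.0), (712.0, 384.0), (912.0, 384.0)]:
    print(plane, round(plane_brightness(body, plane), 3))
```

The clamped form `1 / (1 + (d/r)^2)` is used here instead of a pure inverse-square law so that brightness stays finite when a plane is generated right at the body.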

MAKING OF

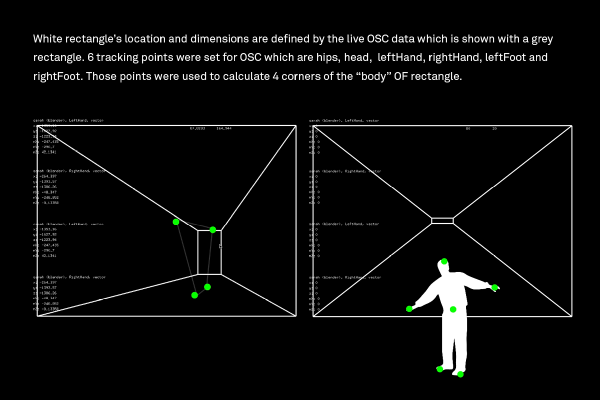

For the first setup, OSC data was collected from the motion tracking platform and transferred into openFrameworks. Six tracking points were set on the platform: the head, the hips, the left and right hands, and the left and right feet. These points were then used to calculate the coordinates of the geometries to be generated, such as the four corner points of the central rectangle called “bodyRect”. There was a small drawback when using the tracking system and the video wall in DBL at the same time. In the second setup, the body movements and tracking points were instead represented by the “mouseX” and “mouseY” positions.
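The text does not say how the four corners of “bodyRect” were derived from the six tracked points. The minimal Python sketch below makes that step concrete by assuming the rectangle is the axis-aligned bounding box of the points. It also includes a mouse fallback for the second setup, which assumes the mouse position marks the body center and the rectangle has a fixed size. The point names, the `Point` type, the function names, and the width and height values are all hypothetical. The installation itself ran in openFrameworks (C++), where the same arithmetic would apply.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float


# The six tracking points listed in the text; these key names are hypothetical.
TRACKED = ("head", "hips", "left_hand", "right_hand", "left_foot", "right_foot")


def body_rect_from_tracking(points: dict[str, Point]) -> list[Point]:
    """First setup (assumed): bodyRect = bounding box of the six tracked points.

    Corners are returned as top-left, top-right, bottom-right, bottom-left,
    in screen coordinates where y grows downward.
    """
    xs = [points[name].x for name in TRACKED]
    ys = [points[name].y for name in TRACKED]
    left, right, top, bottom = min(xs), max(xs), min(ys), max(ys)
    return [Point(left, top), Point(right, top), Point(right, bottom), Point(left, bottom)]


def body_rect_from_mouse(mouse_x: float, mouse_y: float,
                         width: float, height: float) -> list[Point]:
    """Second setup (assumed): the mouse position stands in for the body center."""
    hw, hh = width / 2, height / 2
    return [Point(mouse_x - hw, mouse_y - hh), Point(mouse_x + hw, mouse_y - hh),
            Point(mouse_x + hw, mouse_y + hh), Point(mouse_x - hw, mouse_y + hh)]


# Usage with one made-up frame of tracking data (pixel coordinates).
frame = {
    "head": Point(400, 100), "hips": Point(400, 300),
    "left_hand": Point(300, 250), "right_hand": Point(520, 240),
    "left_foot": Point(360, 500), "right_foot": Point(440, 510),
}
print(body_rect_from_tracking(frame))
print(body_rect_from_mouse(512, 384, 200, 400))
```

Because both functions return the same four-corner layout, the rest of the geometry code can stay unchanged when switching between the tracking input and the mouse input.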